Abstract

The Rosenberg Self-Esteem Scale (RSES) is a widely used measure for assessing self-esteem, but its factor structure is debated. Our goals were to compare 10 alternative models for the RSES and to quantify and predict the method effects. The sample comprised two waves (N = 2,513 9th-grade and 2,370 10th-grade students) of a five-wave school-based longitudinal study. The RSES was administered in each wave. The global self-esteem factor with two latent method factors yielded the best fit to the data. The global factor explained a large amount of the common variance (61% and 46%); however, a relatively large proportion of the common variance was attributed to the negative method factor (34% and 41%), and a small proportion of the common variance was explained by the positive method factor (5% and 13%). We conceptualized the method effect as a response style and found that being a girl and having a higher number of depressive symptoms were associated with both low self-esteem and negative response style, as measured by the negative method factor. Our study supported the one global self-esteem construct and quantified the method effects in adolescents.

Introduction

Self-esteem is the evaluative component of an individual’s self-concept, which is associated with overall health, well-being (DuBois & Flay, 2004), and even mortality (Stamatakis et al., 2004). The Rosenberg Self-Esteem Scale (RSES; Rosenberg, 1965) is the most widely used instrument for measuring global self-esteem and has been translated into numerous languages (Schmitt & Allik, 2005). Having the advantages of a long history of use, an uncomplicated language, and brevity, the RSES is a convenient and, thus, popular device for measuring self-esteem, especially in large, population-based quantitative studies (e.g., Swallen, Reither, Haas, & Meier, 2005).

Although self-esteem was originally conceptualized as a one-dimensional construct (Rosenberg, 1965), there is an ongoing debate about the factor structure of the RSES that is substantively important, influencing the interpretation of responses and also the construct validity of global self-esteem (Corwyn, 2000). Although the scale is routinely handled as a one-factor measure, some exploratory and confirmatory factor analyses (CFAs) have resulted in two oblique factors implying two meaningful dimensions: one of positive and one of negative images of self (Mimura & Griffiths, 2007; Owens, 1994; Roth, Decker, Herzberg, & Brähler, 2008). Furthermore, an alternative two-factor model was proposed that includes self-acceptance and self-assessment factors based on a theoretical consideration (Tafarodi & Milne, 2002). On the other hand, some studies have reported one main factor after reverse coding of the negatively worded items (Schmitt & Allik, 2005). Many researchers have assumed that the two-factor solution arises merely from a method effect of the item wording and, thus, that the scale includes only one substantive dimension (Carmines & Zeller, 1979; Corwyn, 2000; DiStefano & Motl, 2009; Greenberger, Chen, Dmitrieva, & Farruggia, 2003; Horan, DiStefano, & Motl, 2003; Marsh, 1996; Marsh, Scalas, & Nagengast, 2010; Tomás & Oliver, 1999; Wu, 2008).

Method effect refers to variance that is attributable to the method of measurement (in this case, negative and positive wording of the items) rather than to the construct of interest (Fiske, 1982; Podsakoff, MacKenzie, Lee, & Podsakoff, 2003). Unlike exploratory factor analysis, CFA provides an opportunity to compare alternative measurement models and also to specify the method effects (Brown, 2006). Two different approaches are used to model the method effects within a confirmatory analysis framework (Lindwall et al., 2012). One approach is correlated traits–correlated uniqueness (CTCU), which models the method effects with the error covariances; the other is correlated traits–correlated methods (CTCM), which models the method effects by specifying one or more latent method factors. Four studies have found that the one-factor structure with the method effect modeled by the covariances between positively worded items yields the best fit among competing models (Aluja, Rolland, García, & Rossier, 2007; Dunbar, Ford, Hunt, & Der, 2000; Martín-Albo, Núñez, Navarro, & Grijalvo, 2007; Wang, Siegal, Falck, & Carlson, 2001). Only one study has demonstrated that the best fit is yielded by one global self-esteem factor and correlated uniqueness between negatively worded items and between positively worded items (Vasconcelos-Raposo, Fernandes, Teixeira, & Bertelli, 2011); however, because of an identification problem, some minor modification was needed. In favor of CTCM models, a recent study involving a large number of alternative models has provided support for the model with one global self-esteem factor and two latent method factors in a sample of boys (Marsh et al., 2010). This study was based on an exclusively male sample, however, so more research is needed to evaluate whether gender does, indeed, affect method factors. The CTCM approach has been bolstered by other previous studies supporting one global self-esteem factor and one latent method factor from the negatively worded items (DiStefano & Motl, 2009). Furthermore, Tafarodi and Milne (2002) have proposed a five-factor model, which includes their new theoretical factors (self-acceptance and self-assessment), global self-esteem, and two method factors.
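
As an illustration of the difference between the two approaches, the item-level measurement equations can be sketched as follows. The notation is an assumption introduced here, not taken from the source: y_i is the response to item i, \eta_G the global self-esteem factor, \eta_{M(i)} the method factor (positive or negative wording) to which item i belongs, \lambda_i and \gamma_i the respective loadings, \nu_i the intercept, and \varepsilon_i the residual. Setting residual covariances to zero in the CTCM equation is a common simplification and may not match every model tested in the study.

```latex
% CTCM: each item loads on the global factor and on one latent method factor
y_i = \nu_i + \lambda_i\,\eta_{G} + \gamma_i\,\eta_{M(i)} + \varepsilon_i,
\qquad \operatorname{Cov}(\varepsilon_i, \varepsilon_j) = 0 \quad (i \neq j)

% CTCU: no method factor; residuals of items with the same wording may covary
y_i = \nu_i + \lambda_i\,\eta_{G} + \varepsilon_i,
\qquad \operatorname{Cov}(\varepsilon_i, \varepsilon_j) \neq 0
\quad \text{if items } i \text{ and } j \text{ share a wording direction}
```

Because the CTCM form contains explicit factors \eta_{M(i)}, their variance can be quantified and regressed on covariates, which the residual covariances of the CTCU form do not allow.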

The advantage of CTCM models is that the method effects can be quantified and predicted by other variables, something that is not possible when the CTCU model is used (Lindwall et al., 2012). A significant question regarding method effect is whether it merely reflects systematic measurement errors or whether it represents response styles (DiStefano & Motl, 2006; Lindwall et al., 2012; Quilty, Oakman, & Risko, 2006). Response style is defined as “a personality trait that involves the predisposition toward interpreting and endorsing items based on a certain tone or valence” (DiStefano & Motl, 2009, p. 310).

In recent research, method effects associated with negatively phrased items have been treated as a response style and as correlated with or predicted by other variables (DiStefano & Motl, 2006, 2009; Lindwall et al., 2012; Quilty et al., 2006). A response style occurring with the negatively phrased items is positively associated with depression (Lindwall et al., 2012) and with reward responsiveness in women only (DiStefano & Motl, 2009), and negatively associated with life satisfaction (Lindwall et al., 2012), conscientiousness, and emotional stability (Quilty et al., 2006), a tendency toward risk-taking behaviors in both genders, fear of negative evaluation, and private self-consciousness only in women (DiStefano & Motl, 2009). One study demonstrated that response style linked with positively worded items is associated with life satisfaction (Lindwall et al., 2012). These studies supported the proposition that the negative and, probably, the positive response styles can be affected by personality traits and demographic factors, and it is worth examining their associations in order to clarify whether self-esteem per se, response style, or both are associated with other constructs and variables. For example, the question might be whether gender difference in self-esteem reflects differences in the evaluative component of self-concept or whether it only reflects different response styles. In the first case, gender difference should be present when response style is statistically controlled; in the second case, when controlling response style, gender difference should disappear, and response style should be different in boys and girls.

The goals of this report are threefold. The first goal is to contrast the competing measurement models depicted in Fig. 1 in order to identify the best-fitting model in a large adolescent sample. This comparison is informative for at least two reasons. On the one hand, we can contrast the global one-factor model with a two-factor model including negative and positive aspects of self-esteem. On the other hand, we can also contrast the original global self-esteem approach with the two-factor approach including the acceptance and assessment factors and also with the five-factor model proposed by Tafarodi and Milne (2002). The second goal is to test the longitudinal stability of the measurement model of self-esteem in order to make valid conclusions regarding the temporal change in self-esteem. The third goal is to quantify the size and stability of method effects due to positive and negative wording of items, using the explained common variance (ECV) approach, and to test whether gender, depressive symptoms, average grade, and subjective academic performance predict method effects in the RSES.

Method

Participants and procedure

This analysis involved two waves (second and third) from a school-based longitudinal study. The two-stage cluster sampling method is described in more detail elsewhere (Urbán, 2010). The second wave (between March and May 2009) comprised 2,513 9th-grade adolescents (51% girls; mean age = 15.7 years, SD = 0.55), and the third wave (between October and December 2009) comprised 2,370 10th-grade adolescents (52% girls; mean age = 16.4 years, SD = 0.68). A total of 1,857 adolescents participated in both waves. The average time between the two waves was 5.9 months.

Instruments

Self-esteem scale

The Hungarian version of the RSES (RSES–HU; Elekes, 2009) was administered in two forms. This scale contains five positively and five negatively worded items. In the first form, positively worded items were 1, 3, 4, 7, and 10. In the second form, these items were 1, 2, 4, 6, and 7. The first form was administered in wave 2, and the second form was used in wave 3. The items on the scale are listed in Table 2. The internal consistency of the scale was adequate in both waves (α = .87 in wave 2, and α = .86 in wave 3) and in both genders (in boys, α = .87 in both waves; in girls, α = .86 in both waves). Test–retest correlation of RSES–HU in this study was excellent (r = .67).
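
For readers who want to reproduce an internal consistency estimate of this kind, the following is a minimal sketch of Cronbach's α in plain Python. It assumes that negatively worded items have already been reverse-scored; the function name and the toy data are hypothetical and are not taken from the study.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    Assumes negatively worded items were already reverse-scored.
    """
    k = len(rows[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of the summed scale score.
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical toy data: 4 respondents, 3 items (not from the study).
toy = [[1, 2, 1], [2, 2, 3], [3, 4, 3], [4, 4, 4]]
alpha = cronbach_alpha(toy)
```

The formula compares the sum of the item variances with the variance of the total score, so α approaches 1 when the items covary strongly.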

Depressive symptoms

The Hungarian version of the Center for Epidemiologic Studies Depression Scale (CES–D; Radloff, 1977) was used to measure depressive symptoms recorded during the past week. The CES–D consists of 16 negative affect and 4 positive affect items, such as “I felt depressed,” “I felt tearful,” and “I enjoyed life.” Participants had to answer how often they had felt this way in the past week on a 4-point scale ranging from 1 to 4, with overall scores ranging between 20 and 80. Positive affect items were reversed when the sum score of the scale was computed. CES–D is widely used to assess depressive symptoms in nonclinical adolescent and adult populations. The internal consistency of the scale was adequate (α = .82 in wave 2 and .80 in wave 3). Test–retest correlation in this study was excellent (r = .61).
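
The scoring rule described above (20 items coded 1 to 4, positive affect items reversed, totals between 20 and 80) can be sketched as follows. The positions of the positive affect items are an assumption: they follow the standard English CES–D (items 4, 8, 12, and 16), and the source does not state them for the Hungarian version. The function name is hypothetical.

```python
# Positions of the four positive-affect items (1-based). These follow the
# standard English CES-D and are an assumption here; the source does not
# list them for the Hungarian version.
POSITIVE_ITEMS = (4, 8, 12, 16)


def cesd_total(responses, positive_items=POSITIVE_ITEMS):
    """Sum score for 20 responses coded 1-4, reversing positive-affect items."""
    if len(responses) != 20:
        raise ValueError("expected 20 item responses")
    if any(r not in (1, 2, 3, 4) for r in responses):
        raise ValueError("responses must be coded 1 to 4")
    total = 0
    for position, r in enumerate(responses, start=1):
        # On a 1-4 scale, reversing maps 1<->4 and 2<->3.
        total += (5 - r) if position in positive_items else r
    return total


# Fewest symptoms: lowest code on negative items, highest on positive items.
lowest = cesd_total([4 if i in POSITIVE_ITEMS else 1 for i in range(1, 21)])
# Most symptoms: highest code on negative items, lowest on positive items.
highest = cesd_total([1 if i in POSITIVE_ITEMS else 4 for i in range(1, 21)])
```

The two extreme response patterns reproduce the stated score range of 20 to 80.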

Academic performance variables

One question was constructed to measure average grades during the last semester. Self-reported average grades reflect adequately the objective average grade, even though the validity of the self-reported value is somewhat lower in students with a lower average grade. In general, the correlation between self-reported average grade and the objective value is r = .82, as estimated in a meta-analysis (Kuncel, Credé, & Thomas, 2005). Another question was used to measure relative performance in school, as compared with other students. A 5-point scale was provided to answer this question, ranging from 1 (far above the average) to 5 (far below the average). Due to the negative direction of this scale, we call this a measure of relative underachievement in school.

Data analysis strategy

We used structural equation modeling with Mplus 7.0 to estimate the degree of fit of 10 a priori measurement models to the data in both waves. We performed all analyses with maximum likelihood parameter estimates with standard errors and chi-square test statistics that were robust to nonnormality and nonindependence of observations (Muthén & Muthén, 1998–2007, p. 484). We used the full information maximum likelihood estimator to deal with missing data (Muthén & Muthén, 1998–2007).

A satisfactory degree of fit requires the comparative fit index (CFI) and the Tucker–Lewis Index (TLI) to be higher than or close to .95 (Brown, 2006). The next fit index was the root mean square error of approximation (RMSEA). An RMSEA below .05 indicates excellent fit, and a value above .10 indicates poor fit. Closeness of model fit using RMSEA (CFit of RMSEA) is a statistical test (Browne & Cudeck, 1993) that evaluates the statistical deviation of RMSEA from the value .05. Nonsignificant probability values (p > .05) indicate acceptable model fit (Brown, 2006). The last fit index is the standardized root mean square residual (SRMR). An SRMR value below .08 is considered a good fit (Kline, 2011). The Akaike information criterion (AIC) was used to compare nonnested models; a model with a lower AIC value is regarded as fitting the data better than the alternative models (Brown, 2006).

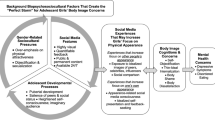

In order to quantify the size and stability of method effects due to positive and negative wording of items, we applied a longitudinal CFA model (see Fig. 2; Little, 2013; Vandenberg & Lance, 2000). In addition, to identify the correlates of the main factor and the method factors, we applied another longitudinal CFA with covariates model (see Fig. 3).

Results

Comparing measurement models

In order to compare alternative models, we tested 10 measurement models of self-esteem in both waves, including (1) one trait factor with no correlated uniqueness; (2) two correlating trait factors: positive and negative trait factors; (3) two correlating trait factors: one acceptance and one assessment factor, as proposed by Tafarodi and Milne (2002); (4) one trait factor with correlated uniqueness among both positive and negative items; (5) one trait factor with correlated uniqueness among negatively worded items; (6) one trait factor with correlated uniqueness among positively worded items; (7) one trait factor and positive and negative latent method factors; (8) one trait factor plus a negative method factor; and (9) one trait factor plus a positive method factor. Finally, (10) we tested a five-factor model proposed by Tafarodi and Milne (2002), which included one trait factor, one acceptance factor, one assessment factor, and positive and negative latent method factors. The fit indices for each model are presented in Table 1.

Only three models (models 6, 7, and 10) satisfied our predefined decision criteria (CFI > .95, TLI > .95, RMSEA ≤ .05, and SRMR < .08) in both waves (see Table 1). Model 10, the five-factor model, yielded the closest model fit in both waves. However, in the first wave, the negative method factor and the acceptance factor did not have any significant factor loadings, and the assessment factor had only three significant loadings. In the second wave, the acceptance factor did not have any significant loadings, and the assessment factor had only one significant loading. Therefore, the interpretability of these factors is unclear, and we conclude that our data do not support the five-factor model.

The model (model 7 in Table 1) containing one trait factor and positive and negative latent method factors also yielded an excellent fit to the data in both waves. In this model, all three factors were also identified by significant factor loadings. The degrees of fit of model 7 (in Table 1) and model 6 are, however, quite close in the single-wave analyses. We also performed a longitudinal CFA, in which the models included the same measurement model at both assessment points, and the related latent variables and the errors of the same items from the two time points were allowed to covary freely (see, e.g., Fig. 2). The degree of fit of both models was acceptable [model 6, χ²(139) = 631.8, RMSEA = .035, Cfit = 1.00, CFI = .97, TLI = .95, SRMR = .065, AIC = 91105; model 7, χ²(137) = 402.7, RMSEA = .026, Cfit = 1.00, CFI = .98, TLI = .97, SRMR = .035, AIC = 90820]. Model 7, however, fit somewhat better than model 6. In sum, model 7 provided a somewhat better solution for the measurement model of the RSES, but the difference in degree of fit was modest.
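
The decision logic used in this comparison can be sketched in a few lines of Python. The cutoffs are the predefined criteria quoted earlier (CFI > .95, TLI > .95, RMSEA ≤ .05, SRMR < .08), and the index and AIC values are those reported above for the longitudinal models; the function and variable names are hypothetical.

```python
def meets_fit_criteria(cfi, tli, rmsea, srmr):
    """Apply the cutoffs CFI > .95, TLI > .95, RMSEA <= .05, SRMR < .08."""
    return cfi > 0.95 and tli > 0.95 and rmsea <= 0.05 and srmr < 0.08


# Longitudinal model 7, using the rounded indices reported in the text.
model7_ok = meets_fit_criteria(cfi=0.98, tli=0.97, rmsea=0.026, srmr=0.035)

# AIC values reported for the two longitudinal models; lower is better.
aic = {"model 6": 91105, "model 7": 90820}
preferred = min(aic, key=aic.get)
aic_difference = aic["model 6"] - aic["model 7"]
```

Note that published indices are rounded, so a value printed as exactly .95 cannot be checked reliably against a strict cutoff; the AIC comparison does not have this problem.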

The factor loadings of model 7 are presented in Table 2. The loadings are very similar in both waves. In order to quantify the degree of unidimensionality of the RSES, we computed the percentage of common variance attributable to the global factor, using the ECV index (Bentler, 2009; Berge & Sočan, 2004). The ECV of the global factor was 61% in wave 2 and 46% in wave 3, indicating that the global self-esteem factor accounts for the largest share of the common variance in both waves; however, a relatively large proportion of the common variance was attributed to the negative method factor (34% and 41%), and a small proportion was explained by the positive method factor (5% and 13%).
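
A minimal sketch of how such shares can be computed is given below. It uses the usual definition of explained common variance for orthogonal factors, that is, a factor's sum of squared standardized loadings divided by the sum of squared loadings over all factors; the source does not print the formula, so this definition is an assumption. The loadings are hypothetical and are not the values in Table 2.

```python
def ecv_shares(loadings):
    """Share of common variance per factor.

    `loadings` maps a factor name to the standardized loadings of the items
    on that factor. Each share is that factor's sum of squared loadings
    divided by the sum of squared loadings over all factors.
    """
    ss = {name: sum(l * l for l in vals) for name, vals in loadings.items()}
    total = sum(ss.values())
    return {name: value / total for name, value in ss.items()}


# Hypothetical loadings for a 10-item scale (NOT the values in Table 2).
example = {
    "global": [0.6] * 10,
    "negative_method": [0.5] * 5,
    "positive_method": [0.2] * 5,
}
shares = ecv_shares(example)
```

By construction the shares sum to 1, which matches the reported percentages (61% + 34% + 5% in wave 2 and 46% + 41% + 13% in wave 3).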

Longitudinal CFA model: measurement invariance and temporal stability

In order to test temporal stability or test–retest correlation of the global self-esteem and response style measured by positive method and negative method factors, we tested a longitudinal CFA model (see Fig. 2). The degree of fit was excellent [χ²(137) = 402.7, RMSEA = .026, Cfit = 1.00, CFI = .982, TLI = .975, SRMR = .032]. Before further analysis, we tested the longitudinal measurement invariance hypothesis with a series of longitudinal CFAs. Fit indices and their difference tests are reported in Table 3. The configural invariance model, which does not contain any constraints, yielded an excellent degree of fit. We applied increasing equality constraints to test the longitudinal invariance. To compare the nested models with increasing constraints, we used the traditional Δχ² test, and we followed the recommendations of Cheung and Rensvold (2002) and Chen (2007), who suggested cutoff values of ΔCFI ≤ .01 and ΔRMSEA ≤ .015 for comparing two nested models. Testing the metric invariance of the global self-esteem factor, we constrained the corresponding factor loadings to be equal at both time points. Although the conservative χ²-difference test indicated a significant decrement in degree of fit, the changes in CFI and RMSEA were less than the cutoff values. We also tested metric invariance of the method factors by applying equality constraints on the factor loadings of the corresponding items. This also yielded a significant change in the χ²-difference test, and the change in CFI was larger than the cutoff value, although the change in RMSEA was still less than the cutoff value. Therefore, the metric invariance of the method factors could not be supported. To test the scalar invariance, we constrained the intercepts of the same items to be equal. Again, the conservative χ²-difference test indicated a significant decrement in degree of fit, but the changes in CFI and RMSEA were less than the cutoff values. These results supported the longitudinal scalar invariance of the global self-esteem factor of the RSES but called into question the metric invariance of the method factors, which is likely to be due to the different order of items in the two waves.

The test–retest correlation of global self-esteem was .74 (p < .0001); the test–retest correlations of the method factors were .41 (p < .0001) for the negative and .48 (p < .0001) for the positive method factor. We also tested the equality of latent means in the current longitudinal model, and fixing the two means to be equal resulted in a significant increase in the χ² value, Satorra–Bentler scaled Δχ²(1) = 5.9, p < .016; however, other fit indices did not change (ΔRMSEA = .000, ΔCFI = .001). Therefore, by using newly proposed criteria, we can support the temporal stability of the latent mean of the global self-esteem factor.
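
The two decision rules used here can be illustrated with a short sketch. It treats the reported scaled difference as a chi-square statistic with 1 degree of freedom and applies the ΔCFI ≤ .01 and ΔRMSEA ≤ .015 cutoffs cited earlier; the function names are hypothetical, and the scaled difference itself is taken from the text rather than recomputed.

```python
from math import erfc, sqrt


def chi2_p_value_df1(x):
    """Upper-tail p value of a chi-square statistic with 1 degree of freedom."""
    # For df = 1, chi-square is a squared standard normal, so
    # P(X > x) = erfc(sqrt(x / 2)).
    return erfc(sqrt(x / 2))


def invariance_supported(delta_cfi, delta_rmsea, cfi_cut=0.01, rmsea_cut=0.015):
    """Cutoffs quoted in the text: change in CFI <= .01 and in RMSEA <= .015."""
    return abs(delta_cfi) <= cfi_cut and abs(delta_rmsea) <= rmsea_cut


# Reported scaled chi-square difference for the equal-means constraint.
p = chi2_p_value_df1(5.9)
# Reported changes in CFI and RMSEA for the same constraint.
ok = invariance_supported(delta_cfi=0.001, delta_rmsea=0.000)
```

The sketch shows why the two criteria can disagree: the difference test is significant at the .05 level, while the changes in CFI and RMSEA are far below their cutoffs.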

Correlates of global and method effects: determinants of response style

In order to understand the covariates of global and method effects, we applied longitudinal CFA with the covariates approach, which is depicted in Fig. 3. We performed the analysis in the total sample and also by gender. The fit indices of the three models were satisfactory [in total sample, χ²(253) = 634.3, RMSEA = .034, Cfit = 1.00, CFI = .965, TLI = .954, SRMR = .053; in boys, χ²(239) = 386.9, RMSEA = .033, Cfit = 1.00, CFI = .965, TLI = .954, SRMR = .063; in girls, χ²(239) = 444.6, RMSEA = .035, Cfit = 1.00, CFI = .965, TLI = .954, SRMR = .055]. The standardized regression coefficients are presented in Table 4. Gender was negatively associated with global self-esteem, with girls scoring lower on global self-esteem. Gender was positively associated with the negative method effect, indicating that girls were more likely to endorse negatively worded items. Depression score was negatively associated with global self-esteem and positively associated with the negative method factor. Adolescents with a higher depression score were more likely to attain a higher score on negatively phrased items and, therefore, more likely to endorse these items. These associations were present in the boys and girls separately. School grade was not associated with either global self-esteem or the negative method effect in either wave and was linked with positive method effects only in girls in wave 3. Relative underachievement in school was negatively related to self-esteem in the total sample.

Discussion

Our study supported the model that included one global self-esteem factor and two latent method factors for the RSES in a large sample of Hungarian adolescents. We compared several different models and, similarly to an earlier study (Marsh et al., 2010), the global self-esteem model with positive and negative method factors yielded a superior degree of fit. However, other measurement models also had an acceptable level of model fit, and the model containing a trait factor and correlated uniqueness among positively worded items—an example of CTCU models—had a degree of model fit very close to our chosen model with one global self-esteem factor and two latent method factors that can be regarded as a CTCM model. Recent recommendations regarding the use of CTCM and CTCU models concluded that the CTCM model is generally the preferred model and that the CTCU model should be applied only when the CTCM model fails (Lance, Noble, & Scullen, 2002). A simulation study presented evidence that CTCU models would imply biased estimation of trait factor loadings when the method factor loadings are high (Conway, Lievens, Scullen, & Lance, 2004). In the present case, the sizes of factor loadings of method factors are in the medium or large range; therefore, a CTCU model would be less appropriate.

Our result not only provides evidence of the factorial structure of the RSES in an adolescent population, but also supports the theory that self-esteem as measured by the RSES should be viewed as a global and one-dimensional construct that can be defined as a positive or negative attitude toward the self (Rosenberg, 1965) or simply as a favorable global evaluation of oneself (Baumeister, Smart, & Boden, 1996).

Although the unidimensionality of the RSES is supported in this study, a large proportion of common variance is explained by the method effect, due to negatively and positively phrased items. Because the size of the method effect is not negligible (39% and 54%), it may have an impact on the reliability of the measurement of self-esteem. Several previous studies have reported method effects in adolescents, young adults, and older samples (DiStefano & Motl, 2006, 2009; Lindwall et al., 2012; Marsh et al., 2010; Quilty et al., 2006); however, in this study, we quantified these effects, using an estimation of the proportion of common variance due to method effects, and found that while the method effects related to positively worded items explain only a small amount, method effects linked to negatively worded items explain a large proportion of common variance. This result is also in line with earlier research that placed emphasis mainly on the method effect related to negatively phrased items (DiStefano & Motl, 2009). We also found that the degree of method effect depends on the order of the items. Because we used the same items in different orders in the two waves, the explained common variances of the method effects differed between waves. Further research should clarify the role of item order in method effects. Although method effects related to wording of the items are regarded as a source of bias (Podsakoff, MacKenzie, & Podsakoff, 2012; Podsakoff et al., 2003), they also provide the possibility of grasping a stable personality trait, namely, the style of response to positively and negatively worded items.

We also tested the longitudinal stability of the measurement model. For longitudinal studies, it is important to demonstrate the longitudinal alpha, beta, and gamma change (Chan, 1998). Alpha change refers to true score change in the given construct such as self-esteem, beta change refers to change when the measurement properties of indicators change over time, and finally, gamma change refers to the situation where the construct changes over time. On the basis of a longitudinal CFA approach, we demonstrated the temporal stability of the global self-esteem factor; however, the factor loadings of the method factors are not invariant in time. While our result is consistent with that of a previous study (Motl & DiStefano, 2002), it is still not known whether the construct of self-esteem changes over a longer period of time.

We found that the method effects due to different affective valences of the items are relatively stable. In this study, we provided evidence for the temporal stability of response style, demonstrating the moderate test–retest correlations of latent method factors through a 6-month follow-up. This result is in line with research on the stability of response style (Motl & DiStefano, 2002; Weijters, Geuens, & Schillewaert, 2010), which presented evidence suggesting that response styles have an important stable component. Furthermore, in this study, we also demonstrated that the response style related to negatively worded items is associated with gender and depressive symptoms; this result is also in line with a previous study of older samples (Lindwall et al., 2012). This and previous studies (Lindwall et al., 2012) unequivocally demonstrated that research participants with higher depressive symptoms are more likely to endorse negatively worded items; another study also reported that people with higher avoidance motivation and neuroticism are more likely to endorse negative items (Quilty et al., 2006). In comparison, one study reported that individuals with a higher score for the self-consciousness trait are less likely to show method effects. The evidence that response style is a stable characteristic and can be predicted by other variables supports the idea that response style can be regarded as a personality trait (e.g., DiStefano & Motl, 2006; Horan et al., 2003; Lindwall et al., 2012). Global self-esteem is also associated with a school-related variable: relative underachievement in school is negatively related to global self-esteem.

The main limitation of this study is that the present sample involved only urban adolescents with a narrow age range; therefore, the generalizability to rural and minority adolescents and also to adults is limited. On the other hand, one of the strengths of this study is that it included two waves of data; therefore, we could test the longitudinal stability of global and method effects in a relatively large representative sample of adolescents.

References

Aluja, A., Rolland, J. P., García, L. F., & Rossier, J. (2007). Dimensionality of the Rosenberg Self-Esteem Scale and its relationships with the three- and the five-factor personality models. Journal of Personality Assessment, 88, 246–249.

Baumeister, R., Smart, L., & Boden, J. (1996). Relation of threatened egotism to violence and aggression: The dark side of self-esteem. Psychological Review, 103, 5–33.

Bentler, P. (2009). Alpha, dimension-free, and model-based internal consistency reliability. Psychometrika, 74(1), 137–143.

Berge, J. M. F., & Sočan, G. (2004). The greatest lower bound to the reliability of a test and the hypothesis of unidimensionality. Psychometrika, 69(4), 613–625.

Brown, T. A. (2006). Confirmatory factor analysis for applied research. New York: Guilford Press.

Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models (pp. 136–162). Newbury Park, CA: Sage.

Carmines, E. G., & Zeller, R. A. (1979). Reliability and validity assessment. Beverly Hills, CA: Sage.

Chan, D. (1998). The conceptualization and analysis of change over time: An integrative approach incorporating longitudinal mean and covariance structures analysis and multiple indicator latent growth modeling. Organizational Research Methods, 1, 421–483.

Chen, F. F. (2007). Sensitivity of goodness of fit indexes to lack of measurement invariance. Structural Equation Modeling, 14, 464–504.

Cheung, G. W., & Rensvold, R. B. (2002). Evaluating goodness-of-fit indexes for testing measurement invariance. Structural Equation Modeling, 9, 233–255.

Conway, J. M., Lievens, F., Scullen, S. E., & Lance, C. E. (2004). Bias in the correlated uniqueness model for MTMM data: A simulation study. Structural Equation Modeling, 11, 535–559.

Corwyn, R. F. (2000). The factor structure of global self-esteem among adolescents and adults. Journal of Research in Personality, 34, 357–379.

DiStefano, C., & Motl, R. W. (2006). Further investigating method effects associated with negatively worded items on self-report surveys. Structural Equation Modeling, 13, 440–464.

DiStefano, C., & Motl, R. W. (2009). Personality correlates of method effects due to negatively worded items on the Rosenberg Self-Esteem Scale. Personality and Individual Differences, 46, 309–313.

DuBois, D. L., & Flay, B. R. (2004). The healthy pursuit of self-esteem: Comment on and alternative to the Crocker and Park (2004) formulation. Psychological Bulletin, 130, 415–420.

Dunbar, M., Ford, G., Hunt, K., & Der, G. (2000). Question wording effects in the assessment of global self-esteem. European Journal of Psychological Assessment, 16, 13–19.

Elekes, Zs. (2009). Egy változó kor változó ifjúsága. Fiatalok alkohol- és egyéb drogfogyasztása Magyarországon – ESPAD 2007. [Changing youth in changing times. Alcohol and other substance use among school children in Hungary – ESPAD 2007] Budapest: L'Harmattan Kiadó.

Fiske, D. W. (1982). Convergent–discriminant validation in measurements and research strategies. In D. Brinberg & L. H. Kidder (Eds.), Forms of validity in research (pp. 77–92). San Francisco: Jossey-Bass.

Greenberger, E., Chen, C., Dmitrieva, J., & Farruggia, S. P. (2003). Item-wording and the dimensionality of the Rosenberg Self-Esteem Scale: do they matter? Personality and Individual Differences, 35, 1241–1254.

Horan, P. M., DiStefano, C., & Motl, R. W. (2003). Wording effects in self-esteem scales: Methodological artifact or response style? Structural Equation Modeling, 10, 435–455.

Kline, R. B. (2011). Principles and practice of structural equation modeling (3rd ed.). New York, NY: Guilford Press.

Kuncel, N. R., Credé, M., & Thomas, L. L. (2005). The validity of self-reported grade point average, class ranks, and test scores: A meta-analysis and review of the literature. Review of Educational Research, 75, 63–82.

Lance, C. E., Noble, C. L., & Scullen, S. E. (2002). A critique of the correlated trait-correlated method and correlated uniqueness models for multitrait-multimethod data. Psychological Methods, 7, 228–244.

Lindwall, M., Barkoukis, V., Grano, C., Lucidi, F., Raudsepp, L., Liukkonen, J., & Thøgersen-Ntoumani, C. (2012). Method effects: The problem with negatively versus positively keyed items. Journal of Personality Assessment, 94, 196–204.

Little, T. D. (2013). Longitudinal structural equation modeling. New York, NY: Guilford Press.

Marsh, H. W. (1996). Positive and negative global self-esteem: A substantively meaningful distinction or artifacts? Journal of Personality and Social Psychology, 70, 810–819.

Marsh, H. W., Scalas, L. F., & Nagengast, B. (2010). Longitudinal tests of competing factor structures for the Rosenberg Self-Esteem Scale: Traits, ephemeral artifacts, and stable response styles. Psychological Assessment, 22, 366–381.

Martín-Albo, J., Núñez, J. L., Navarro, J. G., & Grijalvo, F. (2007). The Rosenberg Self-Esteem Scale: Translation and validation in university students. The Spanish Journal of Psychology, 10, 458–467.

Mimura, C., & Griffiths, P. (2007). A Japanese version of the Rosenberg Self-Esteem Scale: Translation and equivalence assessment. Journal of Psychosomatic Research, 62, 589–594.

Motl, R. W., & DiStefano, C. (2002). Longitudinal invariance of self-esteem and method effects associated with negatively worded items. Structural Equation Modeling, 9, 562–578.

Muthén, L. K., & Muthén, B. O. (1998–2007). Mplus user’s guide (5th ed.). Los Angeles, CA: Author.

Owens, T. J. (1994). Two dimensions of self-esteem: Reciprocal effects of positive self-worth and self-deprecation on adolescent problems. American Sociological Review, 59, 391–407.

Podsakoff, P. M., MacKenzie, S. B., Lee, J. Y., & Podsakoff, N. P. (2003). Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88, 879–903.

Podsakoff, P. M., MacKenzie, S. B., & Podsakoff, N. P. (2012). Sources of method bias in social science research and recommendations on how to control it. Annual Review of Psychology, 63, 539–569.

Quilty, L. C., Oakman, J. M., & Risko, E. (2006). Correlates of the Rosenberg Self-Esteem Scale method effects. Structural Equation Modeling, 13, 99–117.

Radloff, L. S. (1977). The CES–D Scale: A self-report depression scale for research in the general population. Applied Psychological Measurement, 1, 385–401.

Rosenberg, M. (1965). Society and the adolescent self-image. Princeton, NJ: Princeton University Press.

Roth, M., Decker, O., Herzberg, P. Y., & Brähler, E. (2008). Dimensionality and norms of the Rosenberg Self-Esteem Scale in a German general population sample. European Journal of Psychological Assessment, 24, 190–197.

Schmitt, D. P., & Allik, J. (2005). Simultaneous administration of the Rosenberg Self-Esteem Scale in 53 nations: Exploring the universal and culture-specific features of global self-esteem. Journal of Personality and Social Psychology, 89, 623–642.

Stamatakis, K. A., Lynch, J., Everson, S. A., Raghunathan, T., Salonen, J. T., & Kaplan, G. A. (2004). Self-esteem and mortality: Prospective evidence from population-based study. Annals of Epidemiology, 14, 56–85.

Swallen, K. C., Reither, E. N., Haas, S. A., & Meier, A. M. (2005). Overweight, obesity, and health-related quality of life among adolescents: The National Longitudinal Study of Adolescent Health. Pediatrics, 115, 340–347.

Tafarodi, R. W., & Milne, A. B. (2002). Decomposing global self-esteem. Journal of Personality, 70, 443–483.

Tomás, J. M., & Oliver, A. (1999). Rosenberg’s Self-Esteem Scale: Two factors or method effects. Structural Equation Modeling, 6, 84–98.

Urbán, R. (2010). Smoking outcome expectancies mediate the association between sensation seeking, peer smoking, and smoking among young adolescents. Nicotine and Tobacco Research, 12, 59–68.

Vandenberg, R. J., & Lance, C. E. (2000). A review and synthesis of the measurement invariance literature: Suggestions, practices, and recommendations for organizational research. Organizational Research Methods, 3, 4–69.

Vasconcelos-Raposo, J., Fernandes, H. M., Teixeira, C. M., & Bertelli, R. (2011). Factorial validity and invariance of the Rosenberg Self-Esteem Scale among Portuguese youngsters. Social Indicators Research. Advance online publication. doi:10.1007/s11205-011-9782-0

Wang, J., Siegal, H. A., Falck, R. S., & Carlson, R. G. (2001). Factorial structure of Rosenberg’s Self-Esteem Scale among crack-cocaine drug users. Structural Equation Modeling, 8, 275–286.

Weijters, B., Geuens, M., & Schillewaert, N. (2010). The stability of individual response styles. Psychological Methods, 15, 96–110.

Wu, C.-H. (2008). An examination of the wording effect in the Rosenberg Self-Esteem Scale among culturally Chinese people. The Journal of Social Psychology, 148, 535–551.

Acknowledgements

This publication was supported by Grant 1 R01 TW007927-01 from the Fogarty International Center, the National Cancer Institute, and the National Institute on Drug Abuse, within the National Institutes of Health (NIH). Its contents are solely the responsibility of the authors and do not necessarily represent the official view of the NIH. The project was also supported by the European Union and the European Social Fund under Grant Agreement TÁMOP 4.2.1./B-09/1/KMR-2010-0003. Zsolt Demetrovics and Gyöngyi Kökönyei acknowledge the financial support of the János Bolyai Research Fellowship, awarded by the Hungarian Academy of Sciences.

Cite this article

Urbán, R., Szigeti, R., Kökönyei, G. et al. Global self-esteem and method effects: Competing factor structures, longitudinal invariance, and response styles in adolescents. Behav Res 46, 488–498 (2014). https://doi.org/10.3758/s13428-013-0391-5
