Abstract

The mirror neuron system (MNS) has been mooted as a crucial component underlying human social cognition. Initial evidence based on functional magnetic resonance imaging (fMRI) suggests that the MNS plays a role in emotion classification, but further confirmation and convergent evidence is needed. This study employed electroencephalography (EEG) to examine modulations in the mu rhythm associated with the inference of emotions from facial expressions. It was hypothesised that mu suppression would be associated with classifying the emotion portrayed by facial expressions. Nineteen participants viewed pictures of facial expressions or emotion words and were asked to either match the stimulus to an emotion word or to passively observe. Mu activity following stimulus presentation was localised using a 3-D distributed inverse solution, and compared between conditions. Subtractive logic was used to isolate the specific processes of interest. Comparisons of source localisation images between conditions revealed that there was mu suppression associated with recognising emotion from faces, thereby supporting our hypothesis. Further analyses confirmed that those effects were not due to activity associated with the motor response or the observation of facial expressions, offering further support for the hypotheses. This study provides important convergent evidence for the involvement of the MNS in the inference of emotion from facial expressions.

Modulation of mirror neuron activity by inference of emotion from facial expressions

The mirror neuron system (MNS) is purported to comprise a network of neurons that respond both when one performs an action and when one observes performance of that same action (Rizzolatti & Craighero, 2004). Almost all direct evidence for mirror neurons stems from intracranial recordings of macaques (Pellegrino, Fadiga, Fogassi, Gallese, & Rizzolatti, 1992). Based on slightly less direct evidence, the MNS has been mooted as an important part of the system by which humans infer the intentions of others based on their actions (Gallese & Goldman, 1998).

It has been suggested that the activation of mirror neurons at premotor sites during action observation allows humans to ‘simulate’ the action in their own minds (Rizzolatti & Craighero, 2004). Neural networks associated with performing the observed action are activated in the observer, including networks associated with the state of mind that would result in the performance of that action. By this account, the ability of humans to attribute mental states to others (i.e., theory of mind) is inherent, and specialized subconscious neural circuits perform the bulk of the work (Gallese, 2009).

Activity in a human MNS has been inferred from functional magnetic resonance imaging (fMRI) results. Researchers have found action-observation congruent blood oxygen level-dependent (BOLD) activation in a complex network of areas distributed across the brain. This bilateral network is typically described as including the posterior part of the inferior parietal lobule (IPL), the lateral precentral gyrus (primary motor cortex), and the posterior section of the inferior frontal gyrus (Rizzolatti & Craighero, 2004). There is, however, evidence of mirror activity outside of these classically defined mirror neuron areas (Molenberghs, Cunnington, & Mattingley, 2009; Mukamel, Ekstrom, Kaplan, Iacoboni, & Fried, 2010).

The human MNS has also been studied using EEG. The current standard method of detecting MNS activity using EEG is to investigate mu wave activity at central electrodes. Mu is a subcategory of the alpha frequency band and is present during the absence of movement or somatosensory input (Kuhlman, 1978). Mu is typically recorded from the sensorimotor regions and encompasses the frequency range of approximately 7.5–11.5 Hz, although the precise range varies slightly across studies (e.g., Arroyo et al., 1993; Cochin, Barthelemy, Roux, & Martineau, 1999; Oberman, Ramachandran, & Pineda, 2008; Salenius, Schnitzler, Salmelin, Jousmäki, & Hari, 1997). Mu is also associated with activity in the beta range (Pfurtscheller & Lopes da Silva, 1999). One particular property of mu activity that has been interpreted as implicating involvement of the human MNS is its attenuation during action observation (Cochin, Barthelemy, Lejeune, Roux, & Martineau, 1998). This association has led to mu wave activity being used as a proxy measurement for MNS function (Fu & Franz, 2014; Muthukumaraswamy & Johnson, 2004; Oberman et al., 2005). Studies combining fMRI and EEG recordings have indicated that EEG mu suppression is associated with patterns of the fMRI BOLD signal that are recognized as MNS activity (Arnstein, Cui, Keysers, Maurits, & Gazzola, 2011; Braadbaart, Williams, & Waiter, 2013).

Building on the original MNS experiments on grasping in macaques (Rizzolatti, Fadiga, Gallese, & Fogassi, 1996), both fMRI and EEG studies have provided evidence of similar activity in humans (Iacoboni et al., 2005; Muthukumaraswamy, Johnson, & McNair, 2004). More recent studies have demonstrated the effects of facial stimuli on MNS activity. For example, Buccino and colleagues (2004) found fMRI evidence of MNS activation in response to ingestive and communicative facial actions.

The recognition that the MNS might provide a basis for how humans recognise the emotional content of facial expressions was made relatively early (Gallese & Goldman, 1998). It seems logical that a system that infers state of mind from actions could be brought to bear on facial expressions. The speed and automaticity with which facial expressions are typically processed (Dimberg, Thunberg, & Elmehed, 2000) is also consistent with the canonical function of the MNS. Furthermore, an MNS theory of emotion perception (and broader social cognition, for that matter) could parsimoniously explain some disorders of social functioning, such as autism spectrum disorder (ASD). Indeed, ASD has been found to be associated with atypical MNS function by studies using a variety of methodologies (Dapretto et al., 2006; Hadjikhani, Joseph, Snyder, & Tager-Flusberg, 2006; Oberman et al., 2005).

Evidence for MNS involvement in human emotion inference from facial expressions has been provided by a number of studies. Dapretto and colleagues (2006) used fMRI to investigate MNS activity in children (mean age 12 years), with a focus on ASD. Participants were shown images of facial expressions (anger, fear, happiness, sadness, plus neutral), and either imitated or observed them. Fixation periods were included as a control. It was found that during both imitation and observation, neurotypical participants exhibited activation in areas associated with facial processing and the MNS; however, a comparison between the two conditions was not presented.

In another fMRI study, van der Gaag, Minderaa, and Keysers (2007) presented participants with videos of actors performing facial expressions associated with three emotions (happiness, disgust, and fear), or a neutral expression. Participants were asked to merely observe the expression for one block of trials, to match the expression to another in the following block, and to imitate the expression in the final block. It was found that simple observation of the facial expression video resulted in comprehensive activation of areas in the MNS that were also activated while the expression was imitated. These areas included the inferior frontal gyrus and sensorimotor areas. Furthermore, greater activation was found as the task became more active: imitation elicited the most MNS activation, and observation the least. Matching the expression to a picture of another expression evoked an intermediate amount of MNS activity.

Using EEG, Moore, Gorodnitsky, and Pineda (2012) investigated mu suppression during observation of facial expressions. EEG was recorded from participants while they viewed static images of facial expressions (happiness and disgust), buildings, or visual noise (as an additional control). Recordings from the visual noise condition were not analysed, as they included substantial noise from posterior alpha, likely due to differences in attentional demands between conditions. Independent components analysis (ICA) was applied to assess left and right mu components for each participant in the remaining conditions. The primary finding was that activity in the mu components was significantly less powerful when participants viewed facial expressions compared to when they viewed buildings, thereby providing evidence for MNS involvement in facial expression processing.

Although studies have provided initial evidence that the MNS is involved in functions related to facial expression processing, no study has yet demonstrated mu suppression during the classification of emotion from facial expressions. Such a finding would provide important evidence for MNS involvement in emotion perception, convergent with the fMRI literature. In fact, a study by Pineda and Hecht (2009) did not find evidence of mu suppression during classification of emotion from images of eyes; thus, a study using classification of emotions from whole face expressions is necessary.

The present experiment employed the mu suppression method using EEG to test whether the MNS is involved in the classification of emotion from still images of emotional faces. Participants viewed images of a wide range of facial expressions and were instructed to either passively observe the expression or to match the associated emotion to one of four textually presented options. As a control task, emotion words were presented, and participants either observed them or matched them to the same word.

It was hypothesised that the matching task for both faces and words would evoke more mu suppression than the corresponding observation tasks due to, at least in part, the effect of the motor response. Furthermore, given that matching facial expressions to an emotion word would require participants to process the depicted emotion, it was hypothesised that more mu suppression would be involved during the emotion classification task in comparison to the simple matching task posed in the word condition. Such a result would indicate that MNS activity is elicited by the inference of emotions from facial expressions, especially if we assume that the cognitive process of matching a word does not particularly use the MNS.

Method

Participants

Nineteen volunteers (12 female, seven male) from the University of Otago community took part in the experiment. Participants were partially reimbursed for their time. There were 18 right-handed participants and one left-handed participant. Participants ranged in age from 18 to 25 years (M = 21.25 years) and had normal or corrected-to-normal vision and hearing. They were screened for mental health disorders and other conditions that might contraindicate EEG.

Materials

Hardware

Experiments were administered using two Windows XP computers. One computer presented the stimuli and recorded responses (the stimulus computer), and another recorded the participant’s electroencephalogram (the EEG computer). Participants were comfortably seated on a computer chair approximately 60 cm from the stimulus computer monitor. EEG was recorded in a safety-tested body-protected electrical area by a trained experimenter. Images from the stimulus computer were presented to the participant using an Asus VG248QE 61 cm (24-in.) WLED/TN monitor with 144 Hz refresh rate and advertised 1 ms grey-to-grey response time (ASUSTeK Computer Inc., 2014).

Responses were recorded using a custom-made input device (the response box) to obtain precise reaction times (in ms). It consisted of two identical ambidextrous computer mouses, each with left and right microswitches connected directly to an Arduino Uno microcontroller. Four options were presented; the participant responded with the middle and index fingers of both hands.

Triggers were sent from the stimulus computer to the EEG computer via a parallel port connection. EEG was recorded using a 32-channel Ag/AgCl sintered Advanced Neuro Technology (ANT) WaveGuard cap in the standard 10–20 configuration, connected to an ANT Refa8 32-channel amplifier. Conductive gel was used to couple the electrodes to the scalp, and impedance was kept below 5 kΩ at all electrodes. The ground electrode was located halfway between Fpz and Fz. EEG data were continuously recorded at 1024 Hz using ANT Advanced Source Analysis (ASA) software, Version 4.7.3.1.

Software

The experiment was presented by a program written in MATLAB using Psychtoolbox (Version 3.0.10; see Kleiner et al., 2007). This toolbox provides an interface between MATLAB and low-level graphics procedures, facilitating the high-precision presentation of visual stimuli. The experimenter monitored the participant using a live video feed and secondary monitors in an adjoining room.

Most processing of EEG data was performed using EEGLAB (Delorme & Makeig, 2004). EEGLAB is an open-source MATLAB toolbox for analysing and manipulating EEG data. Source analyses were undertaken using the exact low resolution electromagnetic tomography (eLORETA) function of the LORETA-KEY software package (Version 20150415), the latest development in the LORETA family of inverse solutions. This software was developed by the KEY Institute at the University of Zurich and is freely available online. Using eLORETA, the scalp EEG is assembled into a 29 × 34 × 24 matrix of cortical generators containing 6,239 5-mm³ voxels. Localisation by eLORETA is theoretically exact, albeit with low spatial resolution. Technical details can be found in Pascual-Marqui (2007). The eLORETA source localisation images presented in this article represent where differences in the generation of mu activity occurred.

Statistical comparisons between source localisations were undertaken using an implementation of statistical nonparametric mapping (SnPM) programmed in MATLAB. This software was created to combine some of the options in the eLORETA software with some of those of the SnPM13 toolbox for SPM12. The principles of SnPM are described in Nichols and Holmes (2002). Essentially, SnPM is a permutation-based approach that can be used to compare voxel images with minimal assumptions, while implicitly correcting for multiple comparisons.

Stimuli

Still images from the Amsterdam Dynamic Facial Expression Set (ADFES) were used. The ADFES is a validated (van der Schalk, Hawk, Fischer, & Doosje, 2011) stimulus set that contains both video and still images of 22 actors (10 female, 12 male) portraying facial expressions associated with nine emotions: anger, disgust, fear, joy, sadness, surprise, contempt, pride, and embarrassment, plus a neutral expression. The ADFES is freely available for academic use from the Psychology Research Unit at the University of Amsterdam. Our experiment used the full complement of 216 still images (four images are missing), presented at a size of 720 × 576 pixels. Names of the 10 expressions contained in the ADFES were used in control trials.

Procedure

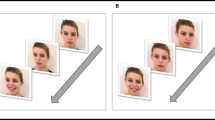

Written informed consent was first obtained. The experiment was approved by the University of Otago Human Ethics Committee and conducted in full conformance with the principles of the Declaration of Helsinki. The experiment was presented as a choice reaction time (RT) task. The basic task involved participants observing an image or word on each trial (depending on experimental condition; described below), and then either producing a four-choice RT response based on the expression depicted in the picture (or named by the word), or merely observing the stimulus without responding. Participants were asked to respond as quickly and correctly as possible where responses were required. Thus, there were two key manipulations, referred to as trial task (observe vs. match), and stimulus medium (word vs. picture), resulting in four different experimental conditions that were manipulated within subjects: observe-text, observe-picture, match-text, and match-picture. The basic procedure is illustrated in Fig. 1.

Fig. 1 Flow diagram of the procedure. (a) A 10-s fixation control period trial was presented at the start of each block of four trials. Each trial then started with a 4-s prompt cueing the participant to the trial task: either (b) the four options for a matching trial, or (c) a prompt to observe. After the prompt period, the stimulus appeared (the cue remained on screen). The stimulus was either (d) a picture or (e) a word, varying independently of the trial task. (f) After each trial (except for at the end of a block), a fixation cross was displayed for a period of randomly generated length (normally distributed, M = 3 s, SD = 0.75 s). Words are expanded in this figure to increase legibility.

On each trial, the participant was first presented with the four response options (or a cue to observe) near the bottom of the screen (the prompt period). After 4 seconds, the stimulus appeared in the centre of the screen and remained for 6 seconds (the stimulus presentation period). The options were presented before the stimulus to familiarise participants with their content and locations and minimise the need for eye movements after stimulus presentation. During matching trials, participants responded using the response box. The participant’s response was then highlighted in blue on the display screen and was unable to be changed.

Trials and stimuli were fully randomised and presented in 15 four-trial blocks with a 10 second fixation period between each block. Consecutive trials were separated by a fixation period with a random duration averaging 3 seconds (SD = 0.75 s). Eight practice trials (two of each condition) were presented at the start of the experiment with instructions.

Analysis

EEG recordings from the stimulus presentation period were the primary focus of this study, but behavioural responses from valid trials were also analysed for RT and accuracy. The latter measures were averaged for each participant and compared using paired t tests. No distinction was made between correct and incorrect trials for these analyses.

EEG data were epoched between 250 and 1,000 ms after stimulus presentation, resulting in a 750-ms long epoch. The 250–1,000-ms poststimulus period was meant to capture only the early activity associated with perception and classification of the stimulus. The 250-ms start point was chosen to reduce the presence of eye-blink artefacts associated with stimulus presentation. The 1,000-ms end point was chosen because it occurred prior to the average response in both conditions and included enough of the period after stimulus presentation to capture the activity of interest.

EEG preprocessing

All EEG data were rereferenced to linked mastoid electrodes and downsampled to 512 Hz for analysis. The continuous data were then low-pass filtered at 40 Hz and high-pass filtered at 0.01 Hz. Continuous data were epoched, and the average voltage for each channel and epoch combination was subtracted as a baseline. An independent components analysis (ICA) was then performed (using the ‘extended’ option in EEGLAB). Epochs were then rejected on the basis of the ICA data. Trials with clear paroxysmal artefacts were rejected, as were trials with noise that was highly correlated across independent components (ICs; indicating a poor decomposition). Trials were also rejected if the response occurred before 812.5 ms poststimulus (i.e., three quarters of the way through the epoch, 250 + 750 × 0.75) to reduce the chances of capturing non-task-related activity and to eliminate erroneous or thoughtless responses. ICA was then performed again to obtain a cleaner decomposition, and artefactual ICs were subtracted from the EEG data with the help of the ADJUST plugin for EEGLAB (Mognon, Jovicich, Bruzzone, & Buiatti, 2011). Eliminated ICs were reflective of eye movements, blinks, neck and jaw muscle activity, and general noise.
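The epoch boundaries and the early-response rejection rule described above reduce to a few lines of arithmetic. The following Python sketch is illustrative only: the function and constant names are hypothetical, and it assumes the 512-Hz rate after downsampling and treats observe trials (which have no response) as unaffected by the rule.

```python
SAMPLING_RATE_HZ = 512    # rate after downsampling, as stated in the text
EPOCH_START_MS = 250.0    # epoch start relative to stimulus onset
EPOCH_END_MS = 1000.0     # epoch end relative to stimulus onset
CUTOFF_FRACTION = 0.75    # responses before this fraction of the epoch are rejected


def epoch_sample_range(start_ms=EPOCH_START_MS, end_ms=EPOCH_END_MS,
                       rate_hz=SAMPLING_RATE_HZ):
    """Return (first_sample, end_sample) offsets from stimulus onset; end is exclusive."""
    first = round(start_ms * rate_hz / 1000.0)
    end = round(end_ms * rate_hz / 1000.0)
    return first, end


def response_cutoff_ms(start_ms=EPOCH_START_MS, end_ms=EPOCH_END_MS,
                       fraction=CUTOFF_FRACTION):
    """Earliest acceptable response time: 250 + 750 * 0.75 = 812.5 ms."""
    return start_ms + (end_ms - start_ms) * fraction


def is_valid_trial(rt_ms):
    """Keep observe trials (rt_ms is None) and responses at or after the cutoff."""
    return rt_ms is None or rt_ms >= response_cutoff_ms()
```

Under these assumptions, each epoch spans samples 128 to 511 after stimulus onset (384 samples), and a match trial answered at 800 ms would be rejected whereas one answered at 900 ms would be retained.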

Timestamps for events from the stimulus computer were algorithmically compared to those from the EEG computer. Minute adjustments were made to most of the EEG timestamps to account for variable delay associated with the transmission of the timestamp event. A faulty T7 electrode during one participant’s session necessitated the removal of that channel from that data set; data were interpolated from surrounding electrodes.

Source localization

In the past, mu suppression has been operationalised as average mu spectrum activity recorded at central electrodes during experimental conditions compared to a resting baseline fixation (e.g., Moore et al., 2012; Pineda & Hecht, 2009). The main disadvantage of this approach is that electrodes do not record activity exclusively from the brain directly below them (Michel & Murray, 2012). The potential impact of the attentionally moderated posterior alpha rhythm is of particular concern (Oberman et al., 2008). To mitigate these limitations, this experiment localised the sources of mu activity to ensure that only alpha of a sensorimotor origin was categorised as mu. Another departure from past methods was that the control task for this experiment was the observation of the stimulus (observe trials) rather than viewing of a fixation stimulus. The literature suggests that resting fixation is not an inherently neutral control task; an abundance of complex EEG activity occurs during rest (Barry, Clarke, Johnstone, Magee, & Rushby, 2007; Newman, Twieg, & Carpenter, 2001). Calculating mu suppression during the matching task relative to an observation task subtracted the potentially confounding effect of the participant observing the stimulus. Eliminating the resting baseline also simplified within-subjects comparisons while keeping them mathematically equivalent to the traditionally used approaches.

The analysis approach in this experiment began with the production of a three-dimensional array of mean spectral generators for each participant and each condition using the LORETA-KEY software package. This analysis followed the spectral analysis method. First, an eLORETA transformation matrix was derived for the standardised electrode locations of the ANT WaveGuard cap. Next, each participant’s recording was rereferenced to the average signal, and the mu (7.5–12.5 Hz) cross-spectrum was calculated at each electrode for valid epochs across each condition using the default LORETA method (i.e., a discrete Fourier transform with boxcar windowing; Chavanon, Wacker, & Stemmler, 2011), effectively decomposing the data to the frequency domain for the poststimulus period. Mu activity in the beta range was not investigated. Effects in the alpha band are usually stronger, and the beta band is regularly excluded from mu investigations (Horan, Pineda, Wynn, Iacoboni, & Green, 2014; Muthukumaraswamy & Johnson, 2004; Oberman et al., 2005; Singh, Pineda, & Cadenhead, 2011). The cross-spectra were localised using the transformation matrix, resulting in a frequency-space domain voxel image of mu activity for each condition, for each participant.
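To make the spectral step concrete, the sketch below computes band-limited power for a single channel using a discrete Fourier transform with a boxcar (rectangular) window, that is, with no tapering. It is a simplified stand-in rather than the LORETA-KEY routine: the software computes a full cross-spectrum across electrodes, whereas this hypothetical `mu_band_power` function returns only the single-channel (diagonal) term, and the power scaling is an arbitrary choice made for illustration.

```python
import cmath
import math


def mu_band_power(signal, rate_hz=512, low_hz=7.5, high_hz=12.5):
    """Sum squared DFT magnitudes (scaled by n**2) over bins inside the band.

    No taper is applied, which is equivalent to a boxcar window.
    Returns (power, list_of_bin_frequencies_in_band).
    """
    n = len(signal)
    resolution = rate_hz / n              # spacing between DFT bins in Hz
    power = 0.0
    band_freqs = []
    for k in range(1, n // 2 + 1):
        freq = k * resolution
        if low_hz <= freq <= high_hz:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(signal))
            power += abs(coeff) ** 2 / n ** 2
            band_freqs.append(freq)
    return power, band_freqs
```

With a 750-ms epoch sampled at 512 Hz (384 samples), the bin spacing is about 1.33 Hz, so only four bins (8.0, 9.33, 10.67, and 12.0 Hz) fall inside the 7.5–12.5 Hz band. This coarse resolution is a direct consequence of the short epoch.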

Source localisations were compared between conditions using statistical nonparametric mapping (SnPM). A double-subtraction analysis was used to test the main experimental hypothesis. Briefly: mu suppression during the matching task relative to the observation task was compared between picture and word trials. This procedure is, in essence, similar to the simple subtractive method often used in fMRI research (Brown, Perthen, Liu, & Buxton, 2007). First, SnPM was used to calculate the difference between the observe-picture and match-picture conditions, and between the observe-word and match-word conditions. The statistic of interest for these comparisons was the difference between the log transformed averaged images for each condition. These comparisons assessed the difference in mu activity associated with selecting an option separately in response to facial expressions and emotion words, and were called the observe-match ratio. Observe-match ratios were calculated for each participant as well as across all participants. This comparison effectively subtracted the effect on mu activity of observing the stimulus.

Because the observe-match ratios capture mu suppression related to both classification of the stimulus and the motor movement associated with selecting the option, the observe-match ratio images for the picture and word trials for each participant were compared using SnPM with a paired t test as the statistic of interest, initially restricted to an MNS-related region of interest (ROI). This ROI was constructed using the LORETA-KEY software package and included bilaterally all voxels in the precentral and postcentral gyri, the pars opercularis of the inferior frontal gyri (Brodmann Area 44), and the inferior parietal lobule (illustrated in Fig. 2). A whole-brain analysis was also undertaken to assess whether alpha generation was affected outside of MNS regions. The t test comparing observe-match ratio images effectively subtracted the effect on mu activity of the button press.

Fig. 2 Illustration of the ROI (for left, top, and right views) used for assessing differences in observe-match ratio between conditions. This ROI was generated by the LORETA software package and included (bilaterally) the pars opercularis of the inferior frontal gyrus (Brodmann Area 44), precentral and postcentral gyri, and the inferior parietal lobule.
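The double-subtraction logic can be written out voxel by voxel. The Python sketch below is a minimal illustration, assuming each source image is a flat list of strictly positive mu power values; the function names are hypothetical and are not part of the LORETA-KEY or SnPM software.

```python
import math


def observe_match_ratio(observe_image, match_image):
    """Voxel-wise log(observe) - log(match).

    Positive values mean less mu power during matching than during
    observation, i.e., mu suppression associated with the matching task.
    """
    return [math.log(o) - math.log(m)
            for o, m in zip(observe_image, match_image)]


def double_subtraction(observe_picture, match_picture,
                       observe_word, match_word):
    """[picture-observe minus picture-match] minus [word-observe minus word-match]."""
    picture_ratio = observe_match_ratio(observe_picture, match_picture)
    word_ratio = observe_match_ratio(observe_word, match_word)
    return [p - w for p, w in zip(picture_ratio, word_ratio)]
```

The first subtraction removes activity shared by observing and matching the same kind of stimulus. The second removes whatever the picture and word matching tasks have in common, which is assumed here to include the button press. A positive value at a voxel therefore indicates more suppression for emotion classification than for word matching.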

A further analysis was undertaken to investigate the potential for a confounding effect of motor response and/or motor readiness differing between picture- and word-matching trials. In short, the difference in average reaction times between match-picture and match-word was calculated for each participant. Additionally, a single eLORETA source localisation image representing the difference in observe-match ratio between picture and word trials was calculated for each participant (i.e., the individual comparisons that composed the average illustrated in Figs. 5 and 6). The difference in reaction time was then permuted between subject images and the maximum correlation from each permutation used to generate a probability distribution.
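A maximum-statistic permutation test of this kind might look as follows. This is a sketch under stated assumptions rather than the authors' MATLAB code: images are flat lists of voxel values, the test is two-tailed on the absolute Pearson correlation, and the names `pearson_r` and `max_correlation_test` are hypothetical.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def max_correlation_test(rt_diffs, images, n_permutations=10000, seed=0):
    """Permute RT differences across subject images; compare the observed
    peak |r| with the distribution of the maximum |r| over voxels."""
    rng = random.Random(seed)
    n_voxels = len(images[0])
    columns = [[image[v] for image in images] for v in range(n_voxels)]
    observed = max(abs(pearson_r(rt_diffs, col)) for col in columns)
    shuffled = list(rt_diffs)
    exceed = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)   # break the subject-to-image pairing
        peak = max(abs(pearson_r(shuffled, col)) for col in columns)
        if peak >= observed:
            exceed += 1
    return observed, (1 + exceed) / (n_permutations + 1)
```

Because each permutation contributes only its largest correlation across voxels, the resulting p value for the observed peak is already corrected for the number of voxels tested.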

All SnPM comparisons were performed using 10,000 permutations. Each image was normalised by dividing all values by the mean for that image. For all t tests, the variance of each image was smoothed to mitigate the effect on the comparison of variance spikes associated with low degrees of freedom. This was accomplished by combining half the variance at each voxel with half the mean variance for the image (equivalent to a smoothing parameter value of 0.5 in the LORETA-KEY software package).
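The normalisation, variance smoothing, and permutation steps can be sketched together in Python. The code below is illustrative only: it assumes that the paired comparison is permuted by randomly flipping the sign of each participant's difference image (a common scheme for paired designs, and not confirmed as the one used here), and the names `normalise`, `smoothed_t`, and `max_t_permutation_test` are hypothetical.

```python
import math
import random


def normalise(image):
    """Divide every voxel by the image mean."""
    mean = sum(image) / len(image)
    return [value / mean for value in image]


def smoothed_t(diff_images, smoothing=0.5):
    """Voxel-wise t on paired differences, with each voxel's variance
    blended with the mean variance across voxels."""
    n = len(diff_images)
    n_voxels = len(diff_images[0])
    means = [sum(img[v] for img in diff_images) / n for v in range(n_voxels)]
    variances = [sum((img[v] - means[v]) ** 2 for img in diff_images) / (n - 1)
                 for v in range(n_voxels)]
    mean_variance = sum(variances) / n_voxels
    t_values = []
    for v in range(n_voxels):
        blended = (1 - smoothing) * variances[v] + smoothing * mean_variance
        t_values.append(means[v] / math.sqrt(blended / n))
    return t_values


def max_t_permutation_test(diff_images, n_permutations=10000, seed=0):
    """One-tailed p values corrected across voxels via the maximum t."""
    rng = random.Random(seed)
    observed = smoothed_t(diff_images)
    max_distribution = []
    for _ in range(n_permutations):
        # Assumed scheme: flip the sign of whole subject images at random.
        flipped = [[-x for x in img] if rng.random() < 0.5 else list(img)
                   for img in diff_images]
        max_distribution.append(max(smoothed_t(flipped)))
    p_values = [(1 + sum(m >= t for m in max_distribution)) / (n_permutations + 1)
                for t in observed]
    return observed, p_values
```

With a smoothing value of 0.5, a voxel whose sample variance happens to be very small no longer produces an inflated t value, because half of its variance estimate is borrowed from the image-wide mean.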

Results

Behavioural responses

Reaction times (RTs) for the emotion classification task were significantly longer than for the word-matching task (2.234 s vs. 1.289 s; p < .0001), and the percentage of correct responses was significantly lower (71.2 % vs. 90.9 %; p < .001). Although it was likely that accuracy in both tasks was degraded by the instruction to respond as quickly as possible, performance was well above chance (25 %) for both conditions. These behavioural data indicate that the picture-matching task was more difficult than the word-matching task, which was to be expected. During the picture-matching task the displayed emotion had to be perceived, then translated to a word, then matched to one of the options. Conversely, the word-matching task could have been accomplished purely by matching the visual aspects of the stimulus to one of the options (although, presumably, participants did read the word, given the automaticity of reading; Kahneman & Chajczyk, 1983). The epoching strategy used in this study aimed to capture only the early parts of this process. Additionally, MNS activity has often been analysed in the presence of differing RTs across conditions (e.g., Molnar-Szakacs, Kaplan, Greenfield, & Iacoboni, 2006; Perry, Troje, & Bentin, 2010).

Electrophysiological measures

Differences between observation and match trials per stimulus type

Differences in distribution of mu activity between observe and match trials during the 250–1,000-ms poststimulus period were compared separately for picture and word trials, resulting in two observe-match ratio images. As outlined in the Method section, these comparisons were made using SnPM with the mean log ratio between conditions as the statistic of interest. For both levels of stimulus medium, there was significant mu suppression during match trials relative to observe trials. Mu suppression associated with the matching task was more extensive in response to picture stimuli (illustrated in Fig. 3) compared to word stimuli (illustrated in Fig. 4).

Fig. 3 Orthogonal slice view of areas of significant mu suppression (observe-match ratio) associated with the matching task in response to picture stimuli (one-tailed p < .05; contrast: picture-observe minus picture-match). The left hemisphere peak is at MNI coordinates: (-35, -30, 40); the right hemisphere peak is at MNI coordinates: (35, -30, 40).

Fig. 4 Orthogonal slice view of areas of significant mu suppression (observe-match ratio) associated with the matching task in response to word stimuli (one-tailed p < .05; contrast: word-observe minus word-match). The left hemisphere peak is at MNI coordinates: (-35, -30, 40); the right hemisphere peak is at MNI coordinates: (35, -30, 40).

Peak mu suppression in both conditions was localised to the subgyral area just below the junction between the postcentral gyrus and parietal lobe in the left hemisphere (picture: f = 0.675, one-tailed p < .0001; word: f = 0.441, one-tailed p < .01). There was an equivalent maximum in the right hemisphere for both conditions that was somewhat weaker than that of the left hemisphere (picture: f = 0.620, one-tailed p < .001; word: f = 0.386, one-tailed p < .01).

Observe-match ratio differences between stimulus types

To assess the difference between the data presented in Figs. 3 and 4, the observe-match ratios for each participant were compared using SnPM with the paired t value as the statistic of interest, restricted bilaterally to the pars opercularis of the inferior frontal gyrus (Brodmann Area 44), precentral and postcentral gyri, and the inferior parietal lobule (see Fig. 2). This comparison effectively subtracted activity associated with the button press (assumed to be constant across the two experimental conditions). This double subtraction (illustrated in Fig. 5) highlights areas of significantly greater mu suppression associated with the emotion classification component of the picture-matching task compared to the word classification component of the word-matching task. There was significantly more mu suppression associated with the picture-matching task compared to that during the word-matching task.

Fig. 5 Left hemisphere, top view, and right hemisphere perspectives showing significantly greater mu suppression following the double subtraction method. Depicts areas of mu suppression associated with the emotion classification component of the picture-matching task compared to word matching (one-tailed p < .05; contrast: [picture-observe minus picture-match] minus [word-observe minus word-match]). Peaks occurred in the right hemisphere at MNI coordinates: (50, -10, 55) and in the left hemisphere at MNI coordinates: (-45, -55, 40).

Peak mu suppression associated with the emotion classification component of the picture-matching task was localised to the right precentral gyrus (t = 3.55, one-tailed p < .02), and also encompassed some of the adjacent postcentral gyrus (illustrated in Fig. 6a). Another maximum of similar peak magnitude was localised to the left inferior parietal lobule (t = 3.54, one-tailed p < .02). This locus of mu suppression was more extensive, spreading through the postcentral gyrus, and into the precentral gyrus (illustrated in Fig. 6b). Expanding the analysis to the whole brain indicated no additional areas of significant alpha suppression differences.

Fig. 6. Orthogonal slice views of (a) right hemisphere and (b) left hemisphere areas of significantly greater mu suppression associated with the emotion classification component of the picture-matching task compared to word matching (one-tailed p < .05; contrast: [picture-observe minus picture-match] minus [word-observe minus word-match]). The peak difference in the right hemisphere occurred at MNI coordinates (50, -10, 55) and in the left hemisphere at MNI coordinates (-45, -55, 40)

Correlation between reaction time differences and difference in observe-match ratio

The difference in observe-match ratio between picture and word trials (i.e., Figs. 5 and 6) was not significantly correlated with the difference in RT between picture- and word-matching trials. The peak correlation (r = .23, two-tailed p > .85) occurred in the superior frontal gyrus, which lies outside our regions of interest; within those regions, the correlation coefficient did not exceed .2 at any voxel.
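The voxel-wise correlation described above could be computed along the following lines. This is a minimal sketch that returns only Pearson's r at each voxel and does not reproduce the significance test reported in the text. The function and variable names are hypothetical, and the inputs are assumed to be one RT difference per participant and one observe-match ratio difference per participant and voxel.

```python
import numpy as np

def voxelwise_pearson(ratio_diff, rt_diff):
    """Pearson r between RT differences and ratio differences at every voxel.

    ratio_diff: hypothetical (n_participants, n_voxels) array
                (picture minus word observe-match ratio).
    rt_diff:    hypothetical (n_participants,) array
                (picture-matching minus word-matching RT).
    """
    ratio_diff = np.asarray(ratio_diff, dtype=float)
    rt_diff = np.asarray(rt_diff, dtype=float)
    x = rt_diff - rt_diff.mean()               # centre the behavioural measure
    y = ratio_diff - ratio_diff.mean(axis=0)   # centre each voxel separately
    numerator = x @ y
    denominator = np.sqrt((x ** 2).sum() * (y ** 2).sum(axis=0))
    return numerator / denominator
```

Taking the maximum of the absolute values over the returned array gives the peak correlation for the volume that was passed in.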

Discussion

These findings support both the first hypothesis that mu suppression would be associated with the matching task, and the second hypothesis that the suppression would be more powerful when matching facial expressions to emotion words than when merely matching words. This demonstration that mu suppression is associated with classification of emotions from facial expressions is consistent with the theory that mu suppression reflects MNS activity, and that the MNS is employed in emotion processing. Thus, the study provides new evidence for the role of the MNS in emotion perception based on facial expressions, and complements previous work using both fMRI and EEG.

This study also further clarifies the fMRI research which provided its foundation. Using fMRI, van der Gaag and colleagues (2007) found more activity in MNS areas during facial expression classification compared to observation. This study provides corroborating evidence while using double subtraction to control for the potential effect on MNS activity associated with both the motor action involved in selecting a response option and the observation of the facial expression. Specifically, the subtraction of activity during the matching task from that during observation (the observe-match ratio) theoretically eliminated mu suppression associated with observation of the stimulus (either facial expression or word). The comparison between those two difference images then theoretically eliminated the effect of selecting a response option. Thus, the final comparison reflects the difference between classifying an emotion and matching a word. It is perhaps fair to assume that matching a word purely on its visual characteristics should not elicit MNS activity, and that the final contrast image only shows the mu suppression associated with classifying an emotion from a facial expression.
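The logic of the double subtraction can be written out under a simple additive assumption. The symbols below are introduced only for this illustration and do not appear in the original analysis: P is mu power on an additive scale (for example, log power), B is baseline power, and s_O, s_C, and s_R are the amounts of suppression attributed to observing the stimulus, classifying or matching it, and selecting a response, respectively.

```latex
\begin{align*}
D_{\text{pic}} &= P^{\text{obs}}_{\text{pic}} - P^{\text{match}}_{\text{pic}}
  = (B - s_{O,\text{pic}}) - (B - s_{O,\text{pic}} - s_{C,\text{emo}} - s_{R})
  = s_{C,\text{emo}} + s_{R}, \\
D_{\text{word}} &= P^{\text{obs}}_{\text{word}} - P^{\text{match}}_{\text{word}}
  = (B - s_{O,\text{word}}) - (B - s_{O,\text{word}} - s_{C,\text{word}} - s_{R})
  = s_{C,\text{word}} + s_{R}, \\
D_{\text{pic}} - D_{\text{word}} &= s_{C,\text{emo}} - s_{C,\text{word}}.
\end{align*}
```

The first subtraction removes the observation term within each stimulus type, and the second removes the response term, provided that term is the same in both matching tasks. If word matching elicits no MNS-related suppression, the remaining contrast reduces to the emotion classification term alone. The cancellation holds only if the components combine additively.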

This study also supplements existing fMRI research by providing important temporal information. Our analyses isolated a short period during the early stages of emotion processing with a temporal resolution that would be difficult to achieve with metabolic neuroimaging techniques.

The subtractive method used in this study is not without its limitations. As Friston and colleagues (1996) note, the pure subtraction of cognitive processes may produce erroneous conclusions where interactions are present. For instance, there exists the risk that the matching task of this study focused attention on the stimulus, thereby eliciting more mu suppression from observing that stimulus during matching compared to passive observation. Nevertheless, the subtraction approach of this study provided more control than the electrode-wise comparisons typically used in mu suppression experiments and allowed for the simple application of relatively assumption-free permutation-based comparisons.

Another strength of this study is that the sources of mu activity were localised, rather than relying on mu activity as recorded at central electrodes. This mitigated the potential problem that central electrodes do not reliably record activity from only central areas. Localising the sources of alpha activity allows more definitive identification of the mu rhythm, especially in terms of differentiating mu from the posterior alpha rhythm. The absence of posterior alpha differences between conditions found by this inherent coanalysis suggests that there were not strong attentional differences between conditions. We hope that this localisation will eventually be improved by the use of a denser electrode array. Simulated data have shown that denser arrays, especially on the inferior head areas, can improve the localisation properties of sLORETA (the precursor to eLORETA). Nevertheless, we could reasonably expect average localisation error on the order of 1 cm (i.e., two voxels) with a 32-electrode setup (Song et al., 2015). Moreover, similar equipment has been used to successfully localise activity in previous studies (Costa et al., 2013).

It should be noted that although eLORETA generates images visually similar to those of metabolic techniques, the basis of the value at each voxel differs. Source localisation images represent areas of large-scale oscillatory activity, which does not necessarily coincide with the BOLD signal. For instance, we would not expect to localise alpha activity differences to face areas; such a finding would not be considered mu suppression and could not be interpreted as MNS activity. The eLORETA images are best viewed as a more precise version of the scalp maps of spectral activity often seen in EEG research.

A potential methodological limitation of this study is that the reaction time (RT) for the word-matching task was significantly shorter than that for the emotion classification task. This would have made it more likely that the button press (or associated motor planning) would occur during the 250–1,000-ms poststimulus epoch during word-matching trials. Mu suppression associated with motor planning and movement is well documented (Pfurtscheller, Brunner, Schlögl, & Lopes da Silva, 2006).

It is fair to assume that the effect of response-associated mu suppression on our analyses was not inordinate for several reasons. First, we would expect more response-associated mu suppression during the word-matching trials due to the epochs containing more responses on average, yet our findings are in the opposite direction. Second, our stimulus-locked epochs were for a brief enough period after the stimulus that they did not often encompass the response, even during the word-matching task. Thus, it is likely that most of the activity during that epoch was associated with the more instantaneous perceptual processes that we were interested in. Perhaps most tellingly, the results from our supplementary analysis indicate that the difference in observe-match ratio between picture and word conditions was not significantly correlated with the difference in RT between the two conditions at any voxel. Nevertheless, future studies might do well to compare tasks that are more closely matched in difficulty. The difficulty of a word-matching control task could be increased by visually obfuscating the stimulus to some degree, or a different control task could be created using an image processing task thought not to engage the MNS.
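One way to quantify how often responses overlapped with the analysis epoch discussed above is to count the proportion of trials in which the RT fell inside the 250–1,000-ms window. The sketch below assumes RTs are available per trial in milliseconds; the function name and the example values are hypothetical and are not data from this study.

```python
import numpy as np

def fraction_responses_in_epoch(rt_ms, start_ms=250.0, end_ms=1000.0):
    """Proportion of trials whose response falls inside the poststimulus epoch."""
    rt = np.asarray(rt_ms, dtype=float)
    inside = (rt >= start_ms) & (rt <= end_ms)
    return float(inside.mean())

# Hypothetical RTs in ms: two of the four responses fall inside the epoch.
example_fraction = fraction_responses_in_epoch([200, 300, 900, 1200])
```

Computing this proportion separately for word-matching and picture-matching trials would show directly how much more often the response fell within the epoch in one task than in the other.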

Although research on the MNS originally focused on its association with hand actions, this study provides further support for the view that the MNS is intimately involved in the processing (and classification) of emotion. This supports the notion that the MNS plays a crucial role in social cognition, as proposed by Gallese and Goldman (1998), and echoed by others (Oberman & Ramachandran, 2007). The occurrence of mu suppression in the very early stages poststimulus indicates that engagement of the MNS when processing facial expressions occurs rapidly and likely automatically upon perception of the faces.

In sum, this study provides electrophysiological support for the idea that the MNS is involved in social perception. Mu suppression was demonstrated using a novel combination of techniques that goes some way towards controlling for potential confounding factors that can intrude on MNS research in general, and EEG research in particular. The findings presented here are consistent with the idea that the development of the MNS has played a crucial role in our social functioning.

References

Arnstein, D., Cui, F., Keysers, C., Maurits, N. M., & Gazzola, V. (2011). μ-suppression during action observation and execution correlates with BOLD in dorsal premotor, inferior parietal, and SI cortices. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience, 31(40), 14243–14249. doi:10.1523/JNEUROSCI.0963-11.2011

Arroyo, S., Lesser, R. P., Gordon, B., Uematsu, S., Jackson, D., & Webber, R. (1993). Functional significance of the mu rhythm of human cortex: An electrophysiologic study with subdural electrodes. Electroencephalography and Clinical Neurophysiology, 87(3), 76–87.

ASUSTeK Computer Inc. (2014). VG248QE specifications. Retrieved from http://www.asus.com/nz/Monitors_Projectors/VG248QE/specifications/

Barry, R. J., Clarke, A. R., Johnstone, S. J., Magee, C. A., & Rushby, J. A. (2007). EEG differences between eyes-closed and eyes-open resting conditions. Clinical Neurophysiology: Official Journal of the International Federation of Clinical Neurophysiology, 118(12), 2765–2773. doi:10.1016/j.clinph.2007.07.028

Braadbaart, L., Williams, J. H. G., & Waiter, G. D. (2013). Do mirror neuron areas mediate mu rhythm suppression during imitation and action observation? International Journal of Psychophysiology: Official Journal of the International Organization of Psychophysiology, 89(1), 99–105. doi:10.1016/j.ijpsycho.2013.05.019

Brown, G. G., Perthen, J. E., Liu, T. T., & Buxton, R. B. (2007). A primer on functional magnetic resonance imaging. Neuropsychology Review, 17(2), 107–125.

Buccino, G., Lui, F., Canessa, N., Patteri, I., Lagravinese, G., Benuzzi, F., & Rizzolatti, G. (2004). Neural circuits involved in the recognition of actions performed by nonconspecifics: An fMRI study. Journal of Cognitive Neuroscience, 16(1), 114–126.

Chavanon, M.-L., Wacker, J., & Stemmler, G. (2011). Rostral anterior cingulate activity generates posterior versus anterior theta activity linked to agentic extraversion. Cognitive, Affective, & Behavioral Neuroscience, 11(2), 172–185.

Cochin, S., Barthelemy, C., Lejeune, B., Roux, S., & Martineau, J. (1998). Perception of motion and qEEG activity in human adults. Electroencephalography and Clinical Neurophysiology, 107(4), 287–295.

Cochin, S., Barthelemy, C., Roux, S., & Martineau, J. (1999). Observation and execution of movement: Similarities demonstrated by quantified electroencephalography. The European Journal of Neuroscience, 11(5), 1839.

Costa, T., Cauda, F., Crini, M., Tatu, M.-K., Celeghin, A., de Gelder, B., & Tamietto, M. (2013). Temporal and spatial neural dynamics in the perception of basic emotions from complex scenes. Social Cognitive and Affective Neuroscience. doi:10.1093/scan/nst164

Dapretto, M., Davies, M. S., Pfeifer, J. H., Scott, A. A., Sigman, M., Bookheimer, S. Y., & Iacoboni, M. (2006). Understanding emotions in others: Mirror neuron dysfunction in children with autism spectrum disorders. Nature Neuroscience, 9(1), 28–30. doi:10.1038/nn1611

Delorme, A., & Makeig, S. (2004). EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134(1), 9–21.

Dimberg, U., Thunberg, M., & Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychological Science, 11(1), 86–89.

Friston, K. J., Price, C. J., Fletcher, P., Moore, C., Frackowiak, R. S. J., & Dolan, R. J. (1996). The trouble with cognitive subtraction. NeuroImage, 4(2), 97–104.

Fu, Y., & Franz, E. (2014). Viewer perspective in the mirroring of actions. Experimental Brain Research, 232(11), 3665–3674.

Gallese, V. (2009). Mirror neurons, embodied simulation, and the neural basis of social identification. Psychoanalytic Dialogues, 19(5), 519–536.

Gallese, V., & Goldman, A. (1998). Mirror neurons and the simulation theory of mind-reading. Trends in Cognitive Sciences, 2(12), 493–501.

Hadjikhani, N., Joseph, R. M., Snyder, J., & Tager-Flusberg, H. (2006). Anatomical differences in the mirror neuron system and social cognition network in autism. Cerebral Cortex, 16(9), 1276–1282.

Horan, W. P. W., Pineda, J., Wynn, J., Iacoboni, M., & Green, M. (2014). Some markers of mirroring appear intact in schizophrenia: Evidence from mu suppression. Cognitive, Affective, & Behavioral Neuroscience, 14(3), 1049–1060. doi:10.3758/s13415-013-0245-8

Iacoboni, M., Molnar-Szakacs, I., Gallese, V., Buccino, G., Mazziotta, J. C., & Rizzolatti, G. (2005). Grasping the intentions of others with one’s own mirror neuron system. PLoS Biology, 3(3), e79.

Kahneman, D., & Chajczyk, D. (1983). Tests of the automaticity of reading: Dilution of Stroop effects by color-irrelevant stimuli. Journal of Experimental Psychology: Human Perception and Performance, 9(4), 497.

Kleiner, M., Brainard, D., Pelli, D., Ingling, A., Murray, R., & Broussard, C. (2007). What’s new in Psychtoolbox-3. Perception, 36(14), 1–16.

Kuhlman, W. N. (1978). Functional topography of the human mu rhythm. Electroencephalography and Clinical Neurophysiology, 44(1), 83–93.

Michel, C. M., & Murray, M. M. (2012). Towards the utilization of EEG as a brain imaging tool. NeuroImage, 61(2), 371–385.

Mognon, A., Jovicich, J., Bruzzone, L., & Buiatti, M. (2011). ADJUST: An automatic EEG artifact detector based on the joint use of spatial and temporal features. Psychophysiology, 48(2), 229–240.

Molenberghs, P., Cunnington, R., & Mattingley, J. B. (2009). Is the mirror neuron system involved in imitation? A short review and meta-analysis. Neuroscience & Biobehavioral Reviews, 33(7), 975–980.

Molnar-Szakacs, I., Kaplan, J., Greenfield, P. M., & Iacoboni, M. (2006). Observing complex action sequences: The role of the fronto-parietal mirror neuron system. NeuroImage, 33(3), 923–935.

Moore, A., Gorodnitsky, I., & Pineda, J. (2012). EEG mu component responses to viewing emotional faces. Behavioural Brain Research, 226(1), 309–316. Retrieved from http://cognitiveneurolab.ucsd.edu/Papers_files/Mooreet al.,2012.pdf

Mukamel, R., Ekstrom, A. D., Kaplan, J., Iacoboni, M., & Fried, I. (2010). Single-neuron responses in humans during execution and observation of actions. Current Biology: CB, 20(8), 750–756. doi:10.1016/j.cub.2010.02.045

Muthukumaraswamy, S. D., & Johnson, B. W. (2004). Primary motor cortex activation during action observation revealed by wavelet analysis of the EEG. Clinical Neurophysiology, 115(8), 1760–1766.

Muthukumaraswamy, S. D., Johnson, B. W., & McNair, N. A. (2004). Mu rhythm modulation during observation of an object-directed grasp. Cognitive Brain Research, 19(2), 195–201.

Newman, S. D., Twieg, D. B., & Carpenter, P. A. (2001). Baseline conditions and subtractive logic in neuroimaging. Human Brain Mapping, 14(4), 228–235.

Nichols, T. E., & Holmes, A. P. (2002). Nonparametric permutation tests for functional neuroimaging: A primer with examples. Human Brain Mapping, 15(1), 1–25.

Oberman, L. M., Hubbard, E. M., McCleery, J. P., Altschuler, E. L., Ramachandran, V. S., & Pineda, J. A. (2005). EEG evidence for mirror neuron dysfunction in autism spectrum disorders. Cognitive Brain Research, 24(2), 190–198.

Oberman, L. M., & Ramachandran, V. S. (2007). The simulating social mind: The role of the mirror neuron system and simulation in the social and communicative deficits of autism spectrum disorders. Psychological Bulletin, 133(2), 310.

Oberman, L. M., Ramachandran, V. S., & Pineda, J. A. (2008). Modulation of mu suppression in children with autism spectrum disorders in response to familiar or unfamiliar stimuli: The mirror neuron hypothesis. Neuropsychologia, 46(5), 1558–1565.

Pascual-Marqui, R. D. (2007). Discrete, 3D distributed, linear imaging methods of electric neuronal activity. Part 1: Exact, zero error localization. Advance online publication. arXiv:0710.3341.

Pellegrino, G., Fadiga, L., Fogassi, L., Gallese, V., & Rizzolatti, G. (1992). Understanding motor events: A neurophysiological study. Experimental Brain Research, 91(1), 176–180.

Perry, A., Troje, N. F., & Bentin, S. (2010). Exploring motor system contributions to the perception of social information: Evidence from EEG activity in the mu/alpha frequency range. Social Neuroscience, 5(3), 272–284.

Pfurtscheller, G., Brunner, C., Schlögl, A., & Lopes da Silva, F. H. (2006). Mu rhythm (de)synchronization and EEG single-trial classification of different motor imagery tasks. NeuroImage, 31(1), 153–159. doi:10.1016/j.neuroimage.2005.12.003

Pfurtscheller, G., & Lopes da Silva, F. H. (1999). Event-related EEG/MEG synchronization and desynchronization: Basic principles. Clinical Neurophysiology, 110(11), 1842–1857.

Pineda, J. A., & Hecht, E. (2009). Mirroring and mu rhythm involvement in social cognition: Are there dissociable subcomponents of theory of mind? Biological Psychology, 80(3), 306–314.

Rizzolatti, G., & Craighero, L. (2004). The mirror-neuron system. Annual Review of Neuroscience, 27, 169–192.

Rizzolatti, G., Fadiga, L., Gallese, V., & Fogassi, L. (1996). Premotor cortex and the recognition of motor actions. Cognitive Brain Research, 3(2), 131–141.

Salenius, S., Schnitzler, A., Salmelin, R., Jousmäki, V., & Hari, R. (1997). Modulation of human cortical Rolandic rhythms during natural sensorimotor tasks. NeuroImage, 5(3), 221–228.

Singh, F., Pineda, J., & Cadenhead, K. S. (2011). Association of impaired EEG mu wave suppression, negative symptoms and social functioning in biological motion processing in first episode of psychosis. Schizophrenia Research, 130(1), 182–186.

Song, J., Davey, C., Poulsen, C., Luu, P., Turovets, S., Anderson, E., & Tucker, D. (2015). EEG source localization: Sensor density and head surface coverage. Journal of Neuroscience Methods, 256, 9–21. doi:10.1016/j.jneumeth.2015.08.015

van der Gaag, C., Minderaa, R. B., & Keysers, C. (2007). Facial expressions: What the mirror neuron system can and cannot tell us. Social Neuroscience, 2(3/4), 179–222. doi:10.1080/17470910701376878

van der Schalk, J., Hawk, S., Fischer, A., & Doosje, B. (2011). Moving faces, looking places: Validation of the Amsterdam Dynamic Facial Expression Set (ADFES). Emotion, 11(4), 907.

Funding

This project was funded in part by the University of Otago.

Cite this article

Moore, M.R., Franz, E.A. Mu rhythm suppression is associated with the classification of emotion in faces. Cogn Affect Behav Neurosci 17, 224–234 (2017). https://doi.org/10.3758/s13415-016-0476-6
