Abstract

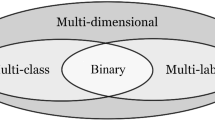

In cases of uncertainty, a multi-class classifier preferably returns a set of candidate classes instead of predicting a single class label with little guarantee. More precisely, the classifier should strive for an optimal balance between the correctness (the true class is among the candidates) and the precision (the candidates are not too many) of its prediction. We formalize this problem within a general decision-theoretic framework that unifies most of the existing work in this area. In this framework, uncertainty is quantified in terms of conditional class probabilities, and the quality of a predicted set is measured in terms of a utility function. We then address the problem of finding the Bayes-optimal prediction, i.e., the subset of class labels with the highest expected utility. For this problem, which is computationally challenging as there are exponentially (in the number of classes) many predictions to choose from, we propose efficient algorithms that can be applied to a broad family of utility functions. Our theoretical results are complemented by experimental studies, in which we analyze the proposed algorithms in terms of predictive accuracy and runtime efficiency.

Similar content being viewed by others

Notes

Practically, this augmentation is often omitted for simplicity.

Note that the candidate solutions in the set are sorted in decreasing order of conditional class probability.

The multi-label VOC datasets are transformed to multi-class by removing instances with more than one label.

LIBSVM datasets collection https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/.

References

Babbar R, Dembczyński K (2018) Extreme classification for information retrieval. Tutorial at ECIR 2018, http://www.cs.put.poznan.pl/kdembczynski/xmlc-tutorial-ecir-2018/xmlc4ir-2018.pdf

Babbar R, Schölkopf B (2017) Dismec: Distributed sparse machines for extreme multi-label classification. Proceedings of the Tenth ACM International Conference on Web Search and Data Mining, DOI 10(1145/3018661):3018741

Balasubramanian V, Ho S, Vovk V (eds) (2014) Conformal Prediction for Reliable Machine Learning: Theory. Morgan Kaufmann, Adaptations and Applications

Beygelzimer A, Langford J, Lifshits Y, Sorkin G, Strehl A (2009) Conditional probability tree estimation analysis and algorithms. In: Proceedings of the Twenty-Fifth Conference on Uncertainty in Artificial Intelligence, AUAI Press, Arlington, Virginia, United States, UAI ’09, pp 51–58

Bi W, Kwok J (2015) Bayes-optimal hierarchical multilabel classification. IEEE Trans Knowl Data Eng 27:1–1

Corani G, Zaffalon M (2008) Learning reliable classifiers from small or incomplete data sets: the naive credal classifier 2. J Mach Learn Res 9:581–621

Corani G, Zaffalon M (2009) Lazy naive credal classifier. In: Proceedings of the 1st ACM SIGKDD Workshop on Knowledge Discovery from Uncertain Data, ACM, pp 30–37

Del Coz JJ, Díez J, Bahamonde A (2009) Learning nondeterministic classifiers. J Mach Learn Res 10:2273–2293

Dembczyński K, Waegeman W, Cheng W, Hüllermeier E (2012) An analysis of chaining in multi-label classification. In: Proceedings of the European Conference on Artificial Intelligence

Dembczyński K, Kotłowski W, Waegeman W, Busa-Fekete R, Hüllermeier E (2016) Consistency of probabilistic classifier trees. In: ECML/PKDD

Denis C, Hebiri M (2017) Confidence sets with expected sizes for multiclass classification. J Mach Learn Res 18:102–128

Depeweg S, Hernández-Lobato JM, Doshi-Velez F, Udluft S (2018) Decomposition of uncertainty in Bayesian deep learning for efficient and risk-sensitive learning. ICML, PMLR, Proceedings of Machine Learning Research 80:1192–1201

Everingham M, Eslami ASM, Gool LV, Williams CKI, Winn J, Zisserman A (2006) The pascal visual object classes challenge 2006 (VOC2006) results. Int J comput vision 111(1):98–136

Everingham M, Gool LV, Williams CKI, Winn J, Zisserman A (2007) The PASCAL visual object classes challenge 2007 (VOC2007) results

Fan RE, Chang KW, Hsieh CJ, Wang XR, Lin CJ (2008) LIBLINEAR: a library for large linear classification. J Mach Learn Res 9:1871–1874

Fiannaca A, Paglia LL, Rosa ML, Bosco GL, Renda G, Rizzo R, Gaglio S, Urso A (2018) Deep learning models for bacteria taxonomic classification of metagenomic data. BMC Bioinformat 19:61–76

Fox J (1997) Applied regression analysis, linear models, and related methods. Sage,

Frank E, Kramer S (2004) Ensembles of nested dichotomies for multi-class problems. In: Proceedings of the Twenty-first International Conference on Machine Learning, ACM, New York, NY, USA, ICML ’04, pp 39

Freitas A (2007) A tutorial on hierarchical classification with applications in bioinformatics. In: Research and Trends in Data Mining Technologies and Applications,, pp 175–208

Geusebroek JM, Burghouts G, Smeulders A (2005) The amsterdam library of object images. Int J Comput Vision 61(1):103–112

Griffin G, Holub A, Perona P (2007) Caltech-256 object category dataset. Tech Rep 7694, California Institute of Technology

Hüllermeier E, Waegeman W (2019) Aleatoric and epistemic uncertainty in machine learning: A tutorial introduction. arXiv:1910.09457

Jansche M (2007) A maximum expected utility framework for binary sequence labeling. In: Association for Computational Linguistics, pp 736–743

Johnson J, Douze M, Jégou H (2017) Billion-scale similarity search with gpus. arXiv preprint arXiv:1702.08734

Kendall A, Gal Y (2017) What uncertainties do we need in Bayesian deep learning for computer vision? Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, 4–9 December 2017. Long Beach, CA, USA, pp 5580–5590

LeCun Y, Cortes C (2010) MNIST handwritten digit database. Tech rep Courant Institute, Google Labs, http://yann.lecun.com/exdb/mnist/

Li FF, Andreetto M, Ranzato MA (2003) Caltech101 image dataset. Tech. rep, California Institute of Technology

Li Y, Wang S, Umarov R, Xie B, Fan M, Li L, Gao X (2018) Deepre: sequence-based enzyme EC number prediction by deep learning. Bioinformatics 34(5):760–769

Malkov YA, Yashunin DA (2018) Efficient and robust approximate nearest neighbor search using hierarchical navigable small world graphs. IEEE Transactions on Pattern Analysis and Machine Intelligence pp 1–1

Melnikov V, Hüllermeier E (2018) On the effectiveness of heuristics for learning nested dichotomies: an empirical analysis. Mach Learn 107(8–10):1537–1560

Mena D, Montañés E, Quevedo JR, del Coz JJ (2017) A family of admissible heuristics for A* to perform inference in probabilistic classifier chains. Mach Learn 106(1):143–169

Mikolov T, Sutskever I, Chen K, Corrado GS, Dean J (2013) Distributed representations of words and phrases and their compositionality. In: Burges CJC, Bottou L, Welling M, Ghahramani Z, Weinberger KQ (eds) Advances in Neural Information Processing Systems 26, Curran Associates, Inc., pp 3111–3119

Morin F, Bengio Y (2005) Hierarchical probabilistic neural network language model. In: Proceedings of the Tenth International Workshop on Artificial Intelligence and Statistics, Society for Artificial Intelligence and Statistics, pp 246–252

Naidan B, Boytsov L (2015) Non-metric space library manual. CoRR arXiv:1508.05470

Navarro G (2002) Searching in metric spaces by spatial approximation. VLDB J 11(1):28–46. https://doi.org/10.1007/s007780200060

Nguyen V, Destercke S, Masson M, Hüllermeier E (2018) Reliable multi-class classification based on pairwise epistemic and aleatoric uncertainty. In: IJCAI, ijcai.org, pp 5089–5095

Ofer D (2019) Dbpedia classes. https://www.kaggle.com/danofer/dbpedia-classes/metadata

Oh S (2017) Top-k hierarchical classification. In: AAAI, AAAI Press, pp 2450–2456

Papadopoulos H (2008) Inductive conformal prediction: theory and application to neural networks. Tools Artif Intel 18(2):315–330

Partalas I, Kosmopoulos A, Baskiotis N, Artières T, Paliouras G, Gaussier É, Androutsopoulos I, Amini M, Gallinari P (2015) LSHTC: A benchmark for large-scale text classification. CoRR arXiv:1503.08581

Paszke A, Gross S, Chintala S, Chanan G, Yang E, DeVito Z, Lin Z, Desmaison A, Antiga L, Lerer A (2017) Automatic differentiation in pytorch. In: NIPS-W

Prabhu Y, Varma M (2014) Fastxml: A fast, accurate and stable tree-classifier for extreme multi-label learning. In: KDD

Prabhu Y, Kag A, Harsola S, Agrawal R, Varma M (2018) Parabel: Partitioned label trees for extreme classification with application to dynamic search advertising. In: Proceedings of the International World Wide Web Conference

Rahimi A, Recht B (2008) Random features for large-scale kernel machines. Adv Neural Inform Process Syst 20:1177–1184

Ramaswamy HG, Tewari A, Agarwal S (2015) Consistent algorithms for multiclass classification with a reject option. CoRR arXiv:5050.4137

Rangwala H, Naik A (2017) Large scale hierarchical classification: foundations, algorithms and applications. In: The European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases

RIKEN (2013) Genomic-based 16s ribosomal rna database. https://metasystems.riken.jp/grd/download.html

Senge R, Bösner S, Dembczyénski K, Haasenritter J, Hirsch O, Donner-Banzhoff N, Hüllermeier E (2014) Reliable classification: Learning classifiers that distinguish aleatoric and epistemic uncertainty. Inf Sci 255:16–29

Shafer G, Vovk V (2008) A tutorial on conformal prediction. J Mach Learn Res 9:371–421

Shrivastava A, Li P (2014) Asymmetric lsh (alsh) for sublinear time maximum inner product search (mips). In: Proceedings of the 27th International Conference on Neural Information Processing Systems - Volume 2, MIT Press, Cambridge, MA, USA, NIPS’14, pp 2321–2329

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556

Stock M, Dembczynski K, Baets BD, Waegeman W (2016) Exact and efficient top-k inference for multi-target prediction by querying separable linear relational models. Data Min Knowl Discov 30(5):1370–1394. https://doi.org/10.1007/s10618-016-0456-z

Syed S (2016) Submodularity in machine learning. MLRG Summer School, https://www.stat.ubc.ca/~saif.syed/papers/mlrg_submodularity.pdf

Vondrak J (2019) Optimization of submodular functions tutorial. https://theory.stanford.edu/~jvondrak/data/submod-tutorial-1.pdf

Vovk V, Gammerman A, Shafer G (2003) Algorithmic Learning in a Random World. Springer-Verlag,

Waegeman W, Dembczyński K, Jachnik A, Cheng W, Hüllermeier E (2014) On the Bayes-optimality of F-measure maximizers. J Mach Learn Res 15:3333–3388

Yagnik J, Strelow D, Ross DA, sung Lin R (2011) The power of comparative reasoning. In: 2011 International Conference on Computer Vision, pp 2431–2438

Yang G, Destercke S, Masson MH (2017a) Cautious classification with nested dichotomies and imprecise probabilities. Soft Comput 21:7447–7462

Yang G, Destercke S, Masson MH (2017b) The costs of indeterminacy: how to determine them? IEEE Transact Cybernet 47:4316–4327

Ye N, Chai K, Lee WS, Chieu HL (2012) Optimizing f-measures: a tale of two approaches. In: Proceedings of the International Conference on Machine Learning

Zaffalon M, Giorgio C, Mauá DD (2012) Evaluating credal classifiers by utility-discounted predictive accuracy. Int J Approx Reasoning 53:1282–1301

Ziyin L, Wang Z, Liang PP, Salakhutdinov R, Morency LP, Ueda M (2019) Deep gamblers: Learning to abstain with portfolio theory. arXiv:1907.00208

Acknowledgements

For this work Willem Waegeman received funding from the Flemish Government under the “Onderzoeksprogramma Artificiële Intelligentie (AI) Vlaanderen” Programme.

Author information

Authors and Affiliations

Corresponding author

Additional information

Responsible editor: Ira Assent, Carlotta Domeniconi, Aristides Gionis.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Regret bounds for the utility functions

In this part we present a short theoretical analysis that relates the Bayes optimal solution for the set-based utility functions to the solution obtained on the probabilities given by a trained model. The goal is to upper bound the regret of the set-based utility functions by the \(L_1\) error of the class probability estimates. The analysis is performed on the level of a single \({\varvec{x}}\).

Let \({\hat{P}}({\varvec{x}})\) be the estimate of the true underlying distribution \(P({\varvec{x}})\). Let \(U^*(P, u)\) denote the optimal utility for P obtained by the optimal solution \(\hat{Y}^*\) (this solution does not have to be unique). Now, let \(\hat{Y}\) denote the optimal solution with respect to \({\hat{P}}({\varvec{x}})\). We define the regret of \(\hat{Y}\) as:

We bound \(\mathrm {reg}_u({\hat{P}}({\varvec{x}}))\) in terms of the \(L_1\)-estimation error, i.e.:

Note that if \(\hat{Y}^* = \hat{Y}\) the regret is 0. Otherwise, we need to have

Thus, we can write

The inequality in (14) follows from the properties of the absolute function, \(a \le |a|\), while the one in (15) holds because the utility functions are from the bounded interval, \(u(\cdot ,\cdot ) \in [0,1]\). We clearly see that the regret is upper bounded by the quality of the estimated probability distribution.

Generalized reject option utility and parameter bounds

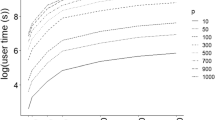

In this part we analyze which values \(\alpha \) and \(\beta \) can take so that the \(g_{\alpha ,\beta }\) family is lower bounded by precision. This family is visualized in Fig. 3. For a given K, the following inequality must hold \(\forall s\in \{1,\ldots ,K\}\), such that \(g_{\alpha ,\beta }(s)\) is lower bounded by precision:

with utilities:

When looking at the boundary cases (i.e., \(s=1, s=K\)), we find that:

By fixing \(\alpha =\frac{K-1}{K}\), the above inequality can be rewritten, \(\forall s\in \{2,\ldots ,K-1\}\), as:

Note that in the limit, when \(K\rightarrow \infty \), we obtain the following upper and lower bound for \(\alpha \) and \(\beta \), respectively:

Experimental setup

For all image datasets, except ALOI.BIN, we use hidden representations obtained by convolutional neural networks, whereas for the text datasets (bottom part of Table 2) tf-idf representations are used. The dimensionality of the representations are denoted by D. For the MNIST dataset we use a convolutional neural network with three consecutive convolutional, batchnorm and max-pool layers, followed by a fully connected dense layer with 32 hidden units. We use ReLU activation functions and optimize the categorical cross-entropy loss by means of Adam optimization with learning rate \(\eta = 1e-3\). For the VOC 2006,Footnote 3 VOC 2007,\({}^{3}\) Caltech-101 and Caltech-256, the hidden representations are obtained by resizing images to 224x224 pixels and passing them through the convolutional part of an entire VGG16 architecture, including a max-pooling operation (Simonyan and Zisserman 2014). The weights are set to those obtained by training the network on ImageNet. For all convolutional neural networks, the number of epochs are set to 100 and early stopping is applied with a patience of five iterations. For ALOI.BIN, we use the ALOIFootnote 4 dataset with precalculated random binning features (Rahimi and Recht 2008). Training is performed end-to-end on a GPU, by using the PyTorch library (Paszke et al. 2017) and infrastructure with the following specifications:

-

CPU: i7-6800K 3.4 GHz (3.8 GHz Turbo Boost) – 6 cores / 12 threads.

-

GPU: 2x Nvidia GTX 1080 Ti 11GB + 1x Nvidia Tesla K40c 11GB.

-

RAM: 64GB DDR4-2666.

For the bacteria dataset, tf-idf representations are calculated by using 3-, 4-, and 5-grams extracted from each 16S rRNA sequence in the dataset (Fiannaca et al. 2018). For the proteins dataset, we consider 3-grams in order to calculate the tf-idf representation for each protein sequence. To comply with literature, we concatenate the tf-idf representations with functional domain encoding vectors, which provide distinct functional and evolutional information about the protein sequence. For more information about the functional domain encodings, we refer the reader to (Li et al. 2018). Precalculated tf-idf representations were provided with the DMOZ and LSHTC1 dataset.Footnote 5

Finally, we use the learned hidden representations for the image datasets and calculate tf-idf representations for the text datasets to train the probabilistic models using a dual L2-regularized logistic regression model. For the DMOZ and LSHTC1 dataset we enforce sparsity by clipping all the learned weights less than a threshold \(\eta = 0.1\) to zero (Babbar and Schölkopf 2017). We implemented all SVBOP algorithms in C++ using the LIBLINEAR library (Fan et al. 2008) and H-NSW implementation from NMSLIB (Naidan and Boytsov 2015). All experiments were conducted on Intel Xeon E5-2697 v3 2.60GHz (14 cores) with 64GB RAM. We include detail information about selection of hyperparameters for all the models in the next section.

Hyperparameters

For the LIBLINEAR library, used for the implementations of all SVBOP algorithms, as well as the baselines, we tuned two parameters: C – inverse of the regularization strength and \(\epsilon _{l}\) – tolerance of termination criterion. For SVBOP-HSG and the underlying H-NSW indexing method, we tuned four parameters: M – the maximum number of neighbors in the layers of H-NSW index, \(\textit{ef}_c\) – size of the dynamic candidate list during H-NSW index construction, and k – initial size of the query to H-NSW index and \(\textit{ef}_s\) – size of the dynamic candidate list during H-NSW index query, that both were always set to the same value. For balanced 2-means tree building, we tuned two parameters: l – the maximum number of leaves on the last level of a tree and \(\epsilon _{c}\) – §tolerance of termination criterion of the 2-means algorithm. We list all the hyperparameters we used to obtained all the results presented in Sects. 5.2 and 5.3 in Table 5.

Rights and permissions

About this article

Cite this article

Mortier, T., Wydmuch, M., Dembczyński, K. et al. Efficient set-valued prediction in multi-class classification. Data Min Knowl Disc 35, 1435–1469 (2021). https://doi.org/10.1007/s10618-021-00751-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10618-021-00751-x