Abstract

Background

A novel computer simulator is now commercially available for robotic surgery using the da Vinci® System (Intuitive Surgical, Sunnyvale, CA). Initial investigations of its utility have been limited by uncertainty about which of its many skills modules and metrics are useful for evaluation. In addition, construct validity testing has been done using medical students as the “novice” group, a clinically irrelevant cohort given the complexity of robotic surgery. This study systematically evaluated the simulator’s skills tasks and metrics and established face, content, and construct validity using a clinically relevant novice group.

Methods

Expert surgeons deconstructed the task of performing robotic surgery into eight separate skills. The 33 modules provided by the da Vinci Skills Simulator (Intuitive Surgical, Sunnyvale, CA) were then evaluated against these deconstructed skills, and 8 of the 33 were determined to be unique. These eight tasks were used to evaluate the performance of 46 surgeons and trainees on the simulator (25 novices, 8 intermediates, and 13 experts). Novice surgeons were general surgery and urology residents or practicing surgeons with clinical experience in open and laparoscopic surgery but limited exposure to robotics. Performance was measured using 85 metrics across all eight tasks.

Results

Face and content validity were confirmed using global rating scales. Of the 85 metrics provided by the simulator, 11 were found to be unique, and these were used for further analysis. Experts performed significantly better than novices in all eight tasks and for nearly every metric. Intermediates were inconsistently better than novices, with only four tasks showing a significant difference in performance. Intermediate and expert performance did not differ significantly.
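The abstract does not state which statistical test underlies the expert-versus-novice comparisons. As a minimal sketch, assuming a nonparametric Mann-Whitney U test (a common choice for small, unequal groups such as 13 experts and 25 novices), a comparison of two groups on a single metric could look like the following. The function names and the example scores are hypothetical and are not data from this study; the p-value uses the normal approximation without tie or continuity correction.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Return (U for group a, two-sided p) via the normal approximation.

    Hypothetical helper: no tie correction to the variance and no
    continuity correction are applied.
    """
    n1, n2 = len(a), len(b)
    ranks = average_ranks(list(a) + list(b))
    r1 = sum(ranks[:n1])                 # rank sum of group a in the pooled sample
    u1 = r1 - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u1 - mean_u) / sd_u
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u1, p


# Hypothetical overall scores (higher is better) for illustration only.
experts = [90, 88, 95, 92]
novices = [60, 70, 65, 72, 68]
u, p = mann_whitney_u(experts, novices)
print(f"U = {u}, p = {p:.4f}")
```

For metrics where lower is better (for example, task time), U for the expert group falls near 0 rather than near n1·n2, but the two-sided p-value is unchanged.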

Conclusion

This study systematically determined the important modules and metrics on the da Vinci Skills Simulator and used them to demonstrate face, content, and construct validity with clinically relevant novice, intermediate, and expert groups. These data will be used to develop proficiency-based training programs on the simulator and to investigate predictive validity.

References

Hung AJ, Zehnder P, Mukul Patil, Cai J, Ng CK, Aron M, Inderbir Gill, Desai MM (2011) Face, content and construct validity of a novel robotic surgery simulator. J Urol 186:1019–1025

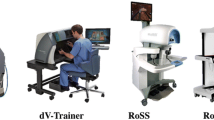

Kenny PA, Wszolek MF, Gould JJ, Libertino JA, Moinzadeh A (2008) Face, content, and construct validity of dV-Trainer, a novel virtual reality simulator for robotic surgery. J Urol 73:1288–1292

Sethi A, Peine WJ, Yousef Mohammadi, Sundaram CP (2009) Validation of a novel virtual reality robotic simulator. J Endourol 23:503–508

Lee JY, Mucksavage P, Kerbl DC, Huynh VB, Etafy M, McDougal EM (2012) Validation study of a virtual reality robotic simulator—role as an assessment tool? J Urol 187:998–1002

McDougall EM (2007) Validation of surgical simulators. J Endourol 3:244–247

Menon M, Shrivastava A, Tewari A, Sarle R, Hemal A, Peabody JO, Vallancien G (2002) Laparoscopic and robot assisted radical prostatectomy: establishment of a structured program and preliminary analysis of outcomes. J Urol 168:945–949

Lavery HG, Samadi DB, Thaly R, Albala D, Ahlering T, Shalhav A, Wiklund P, Tewari A, Fagin R, Costello AJ (2009) The advanced learning curve in robotic prostatectomy: a multi-institutional survey. J Robot Surg 3(3):165–169

Thijssen AS, Schijven MP (2009) Contemporary virtual reality laparoscopy simulators: quicksand or solid grounds for assessing surgical trainees? Am J Surg 199:529–541

Goh AC, Goldfarb DW, Sander JC, Miles BJ, Dunkin BJ (2012) Global evaluative assessment of robotic skills: validation of a clinical assessment tool to measure robotic surgical skills. J Urol 187:247–252

Acknowledgments

We thank Intuitive Surgical, Inc. for providing the robotic simulator used in this study.

Disclosures

The research design and implementation were done independently. Drs. Lyons, Goldfarb, Jones, Miles, Link, Dunkin, and Mr. Badhiwala do not have any conflicts of interest or financial ties to disclose.

Cite this article

Lyons, C., Goldfarb, D., Jones, S.L. et al. Which skills really matter? proving face, content, and construct validity for a commercial robotic simulator. Surg Endosc 27, 2020–2030 (2013). https://doi.org/10.1007/s00464-012-2704-7

DOI: https://doi.org/10.1007/s00464-012-2704-7